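I have been tuning the point-digit recognition for the Haru no Pan Matsuri (Spring Bread Festival) stickers, but it never quite becomes perfect…

I will stop tuning with this post and move on to building the actual application.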

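What changed this time

After various adjustments, I made the following changes.

- The contour data used as digit templates is now straightened beforehand, based on the angle returned by `cv2.minAreaRect()`. (This had little effect, but it let me tidy up the processing, so I will keep using this version.)
- Sped up the nearest-neighbor search inside the ICP processing.
- Revised how the point-digit recognition processing is divided up.
- The similarity calculation used `cv2.matchTemplate()`, but all that is needed is to compare two binary images of the same size, so I replaced it with processing tailored to that. I have not measured the performance, but I expect it is faster.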

Processing created so far

I have been changing things as I went, so the current versions are listed below.

Library imports and image loading

```python
import cv2
import numpy as np
%matplotlib inline
from matplotlib import pyplot as plt
import math
import copy
import random

img1 = cv2.imread('harupan_190428_1.jpg')
img2 = cv2.imread('harupan_190428_2.jpg')
img3 = cv2.imread('harupan_200317_1.jpg')
img4 = cv2.imread('harupan_210227_2.jpg')
img5 = cv2.imread('harupan_210402_1.jpg')
img6 = cv2.imread('harupan_210402_2.jpg')
img7 = cv2.imread('harupan_210414_1.jpg')
```

Detecting candidate contours for point digits

```python
def detect_candidate_contours(image, res_th=800):
    h, w, chs = image.shape
    if h > res_th or w > res_th:
        k = float(res_th)/h if w > h else float(res_th)/w
    else:
        k = 1.0
    img = cv2.resize(image, None, fx=k, fy=k, interpolation=cv2.INTER_AREA)
    hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
    # Convert hue value (rotation, mask by saturation)
    hsv[:,:,0] = np.where(hsv[:,:,0] < 50, hsv[:,:,0]+180, hsv[:,:,0])
    hsv[:,:,0] = np.where(hsv[:,:,1] < 100, 0, hsv[:,:,0])
    # Thresholding with cv2.inRange()
    th_hue = cv2.inRange(hsv[:,:,0], 135, 190)
    # Retrieve all points on the contours (cv2.CHAIN_APPROX_NONE)
    contours, hierarchy = cv2.findContours(th_hue, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)
    indices0 = [i for i,hier in enumerate(hierarchy[0,:,:]) if hier[3] == -1]
    indices1 = [i for i,hier in enumerate(hierarchy[0,:,:]) if hier[3] in indices0]
    contours1 = [contours[i] for i in indices1]
    contours1_filtered = [ctr for ctr in contours1 if cv2.contourArea(ctr) > float(res_th)*float(res_th)/4000]
    return contours1_filtered, img
```
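To make the resizing rule in `detect_candidate_contours()` easier to follow, here is a small standalone sketch of just the arithmetic. The helper names `resize_factor` and `min_contour_area` are hypothetical and exist only for this illustration; the formulas are copied from the function above.

```python
def resize_factor(h, w, res_th=800):
    """Scale factor used before contour detection (same rule as in the article's code)."""
    if h > res_th or w > res_th:
        # The shorter side is scaled to res_th
        return float(res_th) / h if w > h else float(res_th) / w
    return 1.0


def min_contour_area(res_th=800):
    """Contours with an area at or below this value are discarded."""
    return float(res_th) * float(res_th) / 4000


k = resize_factor(3000, 4000)          # landscape photo, 3000 px high
print(round(3000 * k), round(4000 * k))
print(min_contour_area())
```

With these numbers the photo is reduced to about 800 x 1067 pixels, and the area threshold is 160 square pixels. Note that when only the longer side exceeds `res_th` (for example 600 x 1000), the same rule gives a factor above 1, so the image is enlarged rather than reduced.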

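Helper functions

- Creating a small image around a contour

  Crops the image region around the contour and moves the origin of both the image and the contour to the origin of that region. One purpose is to create the data to be compared; the other is debugging.

- Creating a filled contour image

  Used for creating template data and comparison data.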

```python
def create_contour_area_image(img, ctr):
    x,y,w,h = cv2.boundingRect(ctr)
    rtn_img = img[y:y+h,x:x+w,:].copy()
    rtn_ctr = ctr.copy()
    origin = np.array([x,y])
    for c in rtn_ctr:
        c[0,:] -= origin
    return rtn_img, rtn_ctr

# ctr: Should be output of create_contour_area_image() (Origin of points is the origin of bounding box)
# img_shape: Optional, tuple of (image_height, image_width), if omitted, calculated from ctr
def create_solid_contour(ctr, img_shape=(int(0),int(0))):
    if img_shape == (int(0),int(0)):
        _,_,w,h = cv2.boundingRect(ctr)
    else:
        h,w = img_shape
    img = np.zeros((h,w), 'uint8')
    img = cv2.drawContours(img, [ctr], -1, 255, -1)
    return img

# ctr: Should be output of create_contour_area_image() (Origin of points is the origin of bounding box)
# Note: ctr is modified in place
def create_upright_solid_contour(ctr):
    (cx,cy),(w,h),angle = cv2.minAreaRect(ctr)
    M = cv2.getRotationMatrix2D((cx,cy), angle, 1)
    for i in range(ctr.shape[0]):
        ctr[i,0,:] = ( M @ np.array([ctr[i,0,0], ctr[i,0,1], 1]) ).astype('int')
    rect = cv2.boundingRect(ctr)
    img = np.zeros((rect[3],rect[2]), 'uint8')
    ctr -= rect[0:2]
    M[:,2] -= rect[0:2]
    img = cv2.drawContours(img, [ctr], -1, 255,-1)
    return img, M, ctr
```
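As a side note, the origin shift done point by point in `create_contour_area_image()` can also be written as a single broadcasted subtraction. The sketch below uses only NumPy and a made-up four-point contour; for integer points, the minimum x and y used here are the same values that `cv2.boundingRect()` returns as the box origin.

```python
import numpy as np

# Toy contour in OpenCV's layout: shape (n_points, 1, 2), integer (x, y) pairs
ctr = np.array([[[12, 7]], [[15, 7]], [[15, 11]], [[12, 11]]], dtype=np.int32)

# Top-left corner of the bounding box
x, y = ctr[:, 0, :].min(axis=0)

# Broadcasting subtracts (x, y) from every point; ctr itself is left untouched
shifted = ctr - np.array([x, y], dtype=ctr.dtype)

print(shifted[:, 0, :].tolist())
```

The result has the same shape and dtype as the input contour, so it can be passed to the same drawing functions.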

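Organizing the point-digit recognition processing

Datasets

For each contour, some generated data is used several times during processing.

This data is bundled into classes.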

```python
class contour_dataset:
    def __init__(self, ctr):
        self.ctr = ctr.copy()
        self.rrect = cv2.minAreaRect(ctr)
        self.box = cv2.boxPoints(self.rrect)
        self.solid = create_solid_contour(ctr)
        self.pts = np.array([p for p in ctr[:,0,:]])

class template_dataset:
    def __init__(self, ctr, num, selected_idx=[0]):
        self.ctr = ctr.copy()
        self.num = num
        self.rrect = cv2.minAreaRect(ctr)
        self.box = cv2.boxPoints(self.rrect)
        if num == 0:
            self.solid,_,_ = create_upright_solid_contour(ctr)
        else:
            self.solid = create_solid_contour(ctr)
        self.pts = np.array([ctr[idx,0,:] for idx in selected_idx])
```

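ICP processing

Helper functions and the main routine.

- The nearest-neighbor search is the faster version.

  Previously I computed the distance between two points, which involves a square root and probably makes the computation heavier.

  Computing the squared distance is cheaper, and squared values are fine when only comparing magnitudes, so I changed it accordingly.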
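The same squared-distance idea can also be expressed without a Python loop. The following is only a sketch of an alternative, not the version used in this article: `find_nearest_neighbor_vectorized` is a hypothetical name, and whether it is actually faster for these contour sizes has not been measured here.

```python
import numpy as np


def find_nearest_neighbor_vectorized(pts, query):
    """Return (index, distance) of the point in pts closest to query."""
    pts = np.asarray(pts, dtype=float)
    diff = pts - np.asarray(query, dtype=float)
    # Squared distance of every point at once: no square root needed for the comparison
    d_sq = np.einsum('ij,ij->i', diff, diff)
    idx = int(np.argmin(d_sq))
    # The square root is taken only once, for the winning point
    return idx, float(np.sqrt(d_sq[idx]))


pts = np.array([[0, 0], [3, 4], [10, 10]])
idx, dist = find_nearest_neighbor_vectorized(pts, np.array([2, 3]))
print(idx, dist)
```

`np.argmin` returns the first minimum, which matches the tie-breaking of a loop that only updates on a strictly smaller distance.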

```python
# pts: list of 2D points, or ndarray of shape (n,2)
# query: 2D point to find nearest neighbor
def find_nearest_neighbor(pts, query):
    min_distance_sq = float('inf')
    min_idx = 0
    for i, p in enumerate(pts):
        d = np.dot(query - p, query - p)
        if(d < min_distance_sq):
            min_distance_sq = d
            min_idx = i
    return min_idx, np.sqrt(min_distance_sq)

# src, dst: ndarray, shape is (n,2) (n: number of points)
def estimate_affine_2d(src, dst):
    n = min(src.shape[0], dst.shape[0])
    x = dst[0:n].flatten()
    A = np.zeros((2*n,6))
    for i in range(n):
        A[i*2,0] = src[i,0]
        A[i*2,1] = src[i,1]
        A[i*2,2] = 1
        A[i*2+1,3] = src[i,0]
        A[i*2+1,4] = src[i,1]
        A[i*2+1,5] = 1
    M = np.linalg.inv(A.T @ A) @ A.T @ x
    return M.reshape([2,3])

# Find optimum affine matrix using ICP algorithm
# src_pts: ndarray, shape is (n_s,2) (n_s: number of points)
# dst_pts: ndarray, shape is (n_d,2) (n_d: number of points, n_d should be larger or equal to n_s)
# initial_matrix: ndarray, shape is (2,3)
def icp(src_pts, dst_pts, max_iter=20, initial_matrix=np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])):
    default_affine_matrix = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
    if dst_pts.shape[0] < src_pts.shape[0]:
        print("icp: Insufficient destination points")
        return default_affine_matrix, False
    if initial_matrix.shape != (2,3):
        print("icp: Illegal shape of initial_matrix")
        return default_affine_matrix, False
    M = initial_matrix
    # Store indices of the nearest neighbor point of dst_pts to the converted point of src_pts
    nn_idx = []
    for i in range(max_iter):
        nn_idx_tmp = []
        dst_pts_list = [p for p in dst_pts]
        idx_list = list(range(0,dst_pts.shape[0]))
        for p in src_pts:
            p2 = M @ np.array([p[0], p[1], 1])
            idx, d = find_nearest_neighbor(dst_pts_list, p2)
            nn_idx_tmp += [idx_list[idx]]
            del dst_pts_list[idx]
            del idx_list[idx]
        if nn_idx != [] and nn_idx == nn_idx_tmp:
            break
        dst_pts2 = np.zeros_like(src_pts)
        for j,idx in enumerate(nn_idx_tmp):
            dst_pts2[j,:] = dst_pts[idx,:]
        M = estimate_affine_2d(src_pts, dst_pts2)
        nn_idx = nn_idx_tmp
        if i == max_iter -1:
            return M, False
    return M, True
```
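`estimate_affine_2d()` solves a least-squares problem through the normal equations. Because the x and y rows of that system are independent, the same fit can be sketched with `np.linalg.lstsq`, which avoids forming an explicit inverse. The function name `estimate_affine_2d_lstsq` and the test points below are assumptions made for this illustration only.

```python
import numpy as np


def estimate_affine_2d_lstsq(src, dst):
    """Least-squares 2x3 affine matrix mapping src points onto dst points."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = min(src.shape[0], dst.shape[0])
    # One row [x, y, 1] per source point
    A = np.hstack([src[:n], np.ones((n, 1))])
    # Solve A @ X ~= dst for X of shape (3, 2); both output coordinates share A
    X, *_ = np.linalg.lstsq(A, dst[:n], rcond=None)
    return X.T  # shape (2, 3), the layout used by cv2 affine matrices


# Synthetic check: rotate by 90 degrees and translate, then recover the matrix
M_true = np.array([[0.0, -1.0, 5.0],
                   [1.0, 0.0, -2.0]])
src = np.array([[0, 0], [1, 0], [0, 1], [2, 3]], dtype=float)
dst = src @ M_true[:, :2].T + M_true[:, 2]
M_est = estimate_affine_2d_lstsq(src, dst)
print(np.round(M_est, 6))
```

With at least three non-collinear points the two formulations give the same matrix up to floating-point error.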

Similarity calculation

Compared with the version used so far, the only other change is a small bug fix (the image returned for debugging was wrong).

```python
def binary_image_similarity(img1, img2):
    if img1.shape != img2.shape:
        print('binary_image_similarity: Different image size')
        return 0.0
    xor_img = cv2.bitwise_xor(img1, img2)
    # float() is used instead of np.float(), which was removed in NumPy 1.24
    return 1.0 - float(np.count_nonzero(xor_img)) / (img1.shape[0]*img2.shape[1])

# src, dst: contour_dataset or template_dataset (holding member variables box, solid)
def get_transform_by_rotated_rectangle(src, dst):
    # Rotated patterns are created by shifting the starting index
    dst_box2 = np.vstack([dst.box, dst.box])
    max_similarity = 0.0
    max_converted_img = np.zeros((dst.solid.shape[1], dst.solid.shape[0]), 'uint8')
    for i in range(4):
        M = cv2.getAffineTransform(src.box[0:3], dst_box2[i:i+3])
        converted_img = cv2.warpAffine(src.solid, M, dsize=(dst.solid.shape[1], dst.solid.shape[0]), flags=cv2.INTER_NEAREST)
        similarity = binary_image_similarity(converted_img, dst.solid)
        if similarity > max_similarity:
            M_rtn = M
            max_similarity = similarity
            max_converted_img = converted_img
    return M_rtn, max_similarity, max_converted_img

def get_similarity_with_template(target_data, template_data, sim_th_high=0.95, sim_th_low=0.7):
    _,(w1,h1), _ = target_data.rrect
    _,(w2,h2), _ = template_data.rrect
    r = w1/h1 if w1 < h1 else h1/w1
    r = r * h2/w2 if w2 < h2 else r * w2/h2
    M, sim_init, _ = get_transform_by_rotated_rectangle(template_data, target_data)
    if sim_init > sim_th_high or sim_init < sim_th_low or r > 1.4 or r < 0.7:
        dsize = (template_data.solid.shape[1], template_data.solid.shape[0])
        flags = cv2.INTER_NEAREST|cv2.WARP_INVERSE_MAP
        converted_img = cv2.warpAffine(target_data.solid, M, dsize=dsize, flags=flags)
        return sim_init, converted_img
    M, _ = icp(template_data.pts, target_data.pts, initial_matrix=M)
    Minv = cv2.invertAffineTransform(M)
    converted_ctr = np.zeros_like(target_data.ctr)
    for i in range(target_data.ctr.shape[0]):
        converted_ctr[i,0,:] = (Minv[:,0:2] @ target_data.ctr[i,0,:]) + Minv[:,2]
    converted_img = create_solid_contour(converted_ctr, img_shape=template_data.solid.shape)
    val = binary_image_similarity(converted_img, template_data.solid)
    return val, converted_img

def get_similarity_with_template_zero(target_data, template_data):
    dsize = (template_data.solid.shape[1], template_data.solid.shape[0])
    converted_img = cv2.resize(target_data.solid, dsize=dsize, interpolation=cv2.INTER_NEAREST)
    val = binary_image_similarity(converted_img, template_data.solid)
    return val, converted_img

def get_similarities(target, templates):
    similarities = []
    converted_imgs = []
    for tmpl in templates:
        if tmpl.num == 0:
            sim,converted_img = get_similarity_with_template_zero(target, tmpl)
        else:
            sim,converted_img = get_similarity_with_template(target, tmpl)
        similarities += [sim]
        converted_imgs += [converted_img]
    return similarities, converted_imgs

# target: Single contour to compare
# templates: List of template_dataset (for numbers 0, 1, 2, 3, 5)
# svm: Trained SVM
# return: determined number (0,1,2,3,5), -1 if none corresponds
def determine_number(target, templates, svm):
    similarities,_ = get_similarities(target, templates)
    # predict() expects float32 samples with one row per sample, so wrap the vector in a list
    _, result = svm.predict(np.array([similarities], 'float32'))
    return int(result[0])
```
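To show what the XOR-based similarity measures, here is a NumPy-only sketch on two tiny made-up binary images. `binary_similarity` is a hypothetical stand-in for `binary_image_similarity()`: it counts differing pixels with `!=` instead of `cv2.bitwise_xor()`, and it raises an exception on a size mismatch instead of returning 0.0.

```python
import numpy as np


def binary_similarity(a, b):
    """Fraction of pixels that are identical in two same-sized binary images."""
    if a.shape != b.shape:
        raise ValueError('images must have the same shape')
    differing = np.count_nonzero(a != b)  # same count as the non-zero pixels of a XOR b
    return 1.0 - differing / a.size


a = np.array([[255, 255, 0, 0],
              [255, 255, 0, 0]], dtype=np.uint8)
b = np.array([[255, 0, 0, 0],
              [255, 255, 0, 255]], dtype=np.uint8)

score = binary_similarity(a, b)
print(score)  # 2 of the 8 pixels differ -> 0.75
```

A score of 1.0 means the two filled contours overlap exactly, which is why a template compared against its own source contour scores 1.000 in the similarity list.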

SVM training utilities

When sampling the training data, a random seed can now be specified so that the same situation can be reproduced.

```python
def get_random_sample(data_in, labels_in, selected_labels, n_samples, seed=None):
    random.seed(seed)
    data_rtn = []
    labels_rtn = []
    for lab in selected_labels:
        samples = [d for i,d in enumerate(data_in) if labels_in[i]==lab]
        n = min(n_samples, len(samples))
        data_rtn += random.sample(samples, n)
        labels_rtn += [lab] * n
    return data_rtn, labels_rtn

def prepare_svm(train_data, train_labels):
    svm = cv2.ml.SVM_create()
    svm.setKernel(cv2.ml.SVM_LINEAR)
    svm.setType(cv2.ml.SVM_C_SVC)
    svm.setC(100)
    svm.setGamma(1)
    svm.train(np.array(train_data, 'float32'), cv2.ml.ROW_SAMPLE, np.array(train_labels))
    return svm

def print_stat(svm_results, svm_labels):
    stats = {k:{k2:0 for k2 in [-1, 0, 1, 2, 3, 5]} for k in [-1, 0, 1, 2, 3, 5]}
    for res, lab in zip(svm_results[1], svm_labels):
        stats[lab][int(res[0])] += 1
    for k,v in stats.items():
        print('label {:>2}'.format(k), ': {', end='')
        for k2,v2 in v.items():
            print('{}: {:>2}, '.format(k2,v2), end='')
        print('}')

def print_similarity_vector(sim, end=''):
    print('[',end='')
    for s in sim:
        print('{:.3f}, '.format(s), end='')
    print(']', end=end)
```
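The effect of fixing the seed can be checked on toy data. The sketch below is a variation on `get_random_sample()`, not the article's function: `sample_per_label` is a hypothetical name, and it uses a local `random.Random(seed)` instance so that the global random state is left alone.

```python
import random


def sample_per_label(data, labels, wanted_labels, n_samples, seed=None):
    """Pick up to n_samples items per label, reproducibly when seed is given."""
    rng = random.Random(seed)  # local generator, independent of the global one
    picked_data, picked_labels = [], []
    for lab in wanted_labels:
        pool = [d for d, l in zip(data, labels) if l == lab]
        n = min(n_samples, len(pool))
        picked_data += rng.sample(pool, n)
        picked_labels += [lab] * n
    return picked_data, picked_labels


# Toy data: ten one-element feature vectors with two labels
data = [[i / 10.0] for i in range(10)]
labels = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]

first = sample_per_label(data, labels, [0, 1], 3, seed=123)
second = sample_per_label(data, labels, [0, 1], 3, seed=123)
print(first == second)  # True: the same seed gives the same training subset
```

Labels with fewer than `n_samples` items contribute everything they have, which is what happens to the rare "3" label in this data set.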

Preparing the data

From here on, the required data is extracted from the real images.

Some of the data is saved for later reuse.

Obtaining contour data

```python
original_imgs = [img1, img2, img3, img4, img5, img6, img7]
resized_imgs = []
resized_ctrs = []
original_img_idx = []
subctrs_all = []
subimgs_all = []
for idx, original_img in enumerate(original_imgs):
    ctrs, img = detect_candidate_contours(original_img)
    resized_imgs += [img]
    resized_ctrs += [ctrs]
    for ctr in ctrs:
        original_img_idx += [idx]
        subimg,subctr = create_contour_area_image(img, ctr)
        subctrs_all += [subctr]
        subimgs_all += [subimg]
```

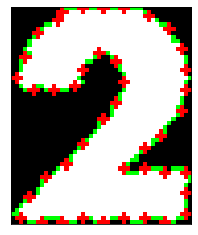

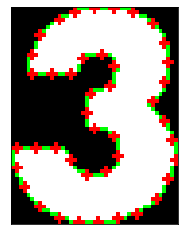

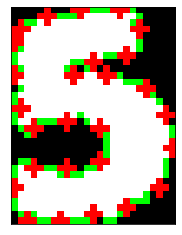

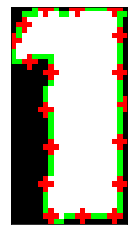

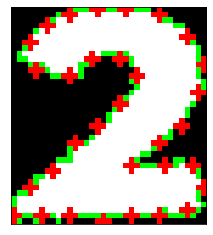

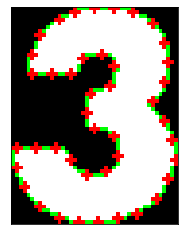

Preparing the template data

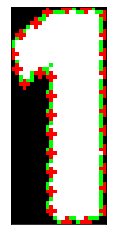

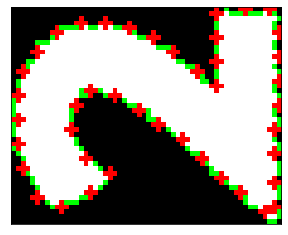

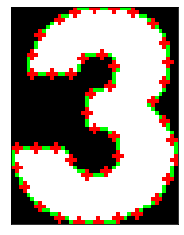

As before, the third image (2020) and the fifth image (2021) contain no "3 points" data, so the data from the first image (2019) is used in its place.

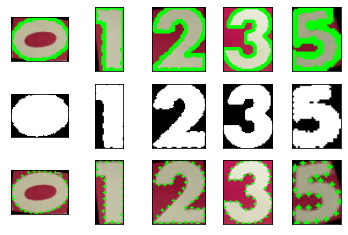

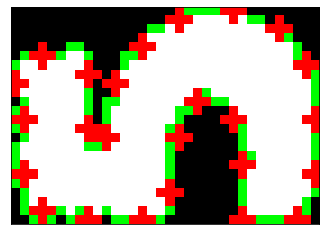

```python
ctrs1_idx_zero = 26
ctrs1_idx_one = 27
ctrs1_idx_two = 24
ctrs1_idx_three = 33
ctrs1_idx_five = 8
ctrs1_idx_numbers = [ctrs1_idx_zero, ctrs1_idx_one, ctrs1_idx_two, ctrs1_idx_three, ctrs1_idx_five]
subimgs1 = []
subctrs1 = []
binimgs1 = []
subctrs1_selected_pts = []
for i,idx in enumerate(ctrs1_idx_numbers):
    img, ctr = create_contour_area_image(resized_imgs[0], resized_ctrs[0][idx])
    binimg, M, ctr2 = create_upright_solid_contour(ctr)
    img2 = cv2.warpAffine(img.copy(), M, (binimg.shape[1], binimg.shape[0]))
    subimgs1 += [img2]
    subctrs1 += [ctr2]
    binimgs1 += [binimg]
    ctr_selected_pts = [j for j in range(ctr2.shape[0]) if j % 5 == 0]
    if i != 0:
        subctrs1_selected_pts += [ctr_selected_pts]
    ctr_img = cv2.drawContours(img2.copy(), [ctr2], -1, (0,255,0), 2)
    pts_img = img2.copy()
    for p in ctr_selected_pts:
        pts_img = cv2.drawMarker(pts_img, ctr2[p,0,:], (0,255,0), markerType=cv2.MARKER_CROSS, markerSize=3)
    plt.subplot(3,5,1+i), plt.imshow(cv2.cvtColor(ctr_img, cv2.COLOR_BGR2RGB)), plt.xticks([]), plt.yticks([])
    plt.subplot(3,5,6+i), plt.imshow(binimg,cmap='gray'), plt.xticks([]), plt.yticks([])
    plt.subplot(3,5,11+i), plt.imshow(cv2.cvtColor(pts_img, cv2.COLOR_BGR2RGB), cmap='gray'), plt.xticks([]), plt.yticks([])
plt.show()
```

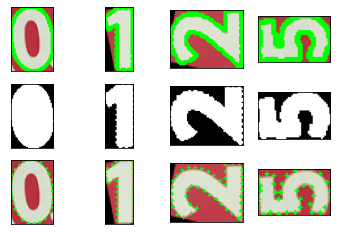

```python
ctrs3_idx_zero = 7
ctrs3_idx_one = 4
ctrs3_idx_two = 17
ctrs3_idx_five = 6
ctrs3_idx_numbers = [ctrs3_idx_zero, ctrs3_idx_one, ctrs3_idx_two, ctrs3_idx_five]
subimgs3 = []
subctrs3 = []
binimgs3 = []
subctrs3_selected_pts = []
for i,idx in enumerate(ctrs3_idx_numbers):
    img, ctr = create_contour_area_image(resized_imgs[2], resized_ctrs[2][idx])
    binimg, M, ctr2 = create_upright_solid_contour(ctr)
    img2 = cv2.warpAffine(img.copy(), M, (binimg.shape[1], binimg.shape[0]))
    subimgs3 += [img2]
    subctrs3 += [ctr2]
    binimgs3 += [binimg]
    ctr_selected_pts = [j for j in range(ctr2.shape[0]) if j% 5 == 0]
    if i != 0:
        subctrs3_selected_pts += [ctr_selected_pts]
    ctr_img = cv2.drawContours(img2.copy(), [ctr2], -1, (0,255,0), 2)
    pts_img = img2.copy()
    for p in ctr_selected_pts:
        pts_img = cv2.drawMarker(pts_img, ctr2[p,0,:], (0,255,0), markerType=cv2.MARKER_CROSS, markerSize=3)
    plt.subplot(3,4,1+i), plt.imshow(cv2.cvtColor(ctr_img, cv2.COLOR_BGR2RGB)), plt.xticks([]), plt.yticks([])
    plt.subplot(3,4,5+i), plt.imshow(binimg,cmap='gray'), plt.xticks([]), plt.yticks([])
    plt.subplot(3,4,9+i), plt.imshow(cv2.cvtColor(pts_img, cv2.COLOR_BGR2RGB)), plt.xticks([]), plt.yticks([])
plt.show()

# Substitute the "3" data taken from the first image (2019)
subimgs3.insert(3, subimgs1[3])
subctrs3.insert(3, subctrs1[3])
binimgs3.insert(3, binimgs1[3])
subctrs3_selected_pts.insert(2, subctrs1_selected_pts[2])
```

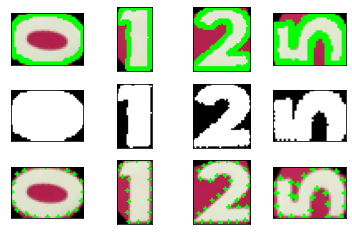

```python
ctrs5_idx_zero = 3
ctrs5_idx_one = 4
ctrs5_idx_two = 2
ctrs5_idx_five = 5
ctrs5_idx_numbers = [ctrs5_idx_zero, ctrs5_idx_one, ctrs5_idx_two, ctrs5_idx_five]
subimgs5 = []
subctrs5 = []
binimgs5 = []
subctrs5_selected_pts = []
for i,idx in enumerate(ctrs5_idx_numbers):
    img, ctr = create_contour_area_image(resized_imgs[4], resized_ctrs[4][idx])
    binimg, M, ctr2 = create_upright_solid_contour(ctr)
    img2 = cv2.warpAffine(img.copy(), M, (binimg.shape[1], binimg.shape[0]))
    subimgs5 += [img2]
    subctrs5 += [ctr2]
    binimgs5 += [binimg]
    ctr_selected_pts = [j for j in range(ctr2.shape[0]) if j % 5 == 0]
    if i != 0:
        subctrs5_selected_pts += [ctr_selected_pts]
    ctr_img = cv2.drawContours(img2.copy(), [ctr2], -1, (0,255,0), 2)
    pts_img = img2.copy()
    for p in ctr_selected_pts:
        pts_img = cv2.drawMarker(pts_img, ctr2[p,0,:], (0,255,0), markerType=cv2.MARKER_CROSS, markerSize=3)
    plt.subplot(3,4,1+i), plt.imshow(cv2.cvtColor(ctr_img, cv2.COLOR_BGR2RGB)), plt.xticks([]), plt.yticks([])
    plt.subplot(3,4,5+i), plt.imshow(binimg,cmap='gray'), plt.xticks([]), plt.yticks([])
    plt.subplot(3,4,9+i), plt.imshow(cv2.cvtColor(pts_img, cv2.COLOR_BGR2RGB)), plt.xticks([]), plt.yticks([])
plt.show()

# Substitute the "3" data taken from the first image (2019)
subimgs5.insert(3, subimgs1[3])
subctrs5.insert(3, subctrs1[3])
binimgs5.insert(3, binimgs1[3])
subctrs5_selected_pts.insert(2, subctrs1_selected_pts[2])
```
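The `insert` calls use index 3 for the image, contour and binary-image lists but index 2 for the selected-point lists. The reason is that the selected-point lists hold no entry for "0". The sketch below replays this with stand-in lists in which each element is simply the digit it represents; the variable names are made up for the illustration.

```python
# Stand-in lists: each entry is the digit that the real element represents
digits_2019 = [0, 1, 2, 3, 5]   # plays the role of subimgs1 / subctrs1 / binimgs1
pts_2019 = [1, 2, 3, 5]         # plays the role of subctrs1_selected_pts (no entry for "0")

digits_2020 = [0, 1, 2, 5]      # plays the role of subimgs3: the image has no "3"
pts_2020 = [1, 2, 5]            # plays the role of subctrs3_selected_pts

# Borrow the "3" entry from the 2019 data at the position that keeps digit order
digits_2020.insert(3, digits_2019[3])
pts_2020.insert(2, pts_2019[2])

print(digits_2020)  # [0, 1, 2, 3, 5]
print(pts_2020)     # [1, 2, 3, 5]
```

After the inserts, both lists line up with the digit order 0, 1, 2, 3, 5 that the later dataset construction assumes.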

Ground-truth labels

```python
labels1 = [-1,-1,-1,-1,-1 ,-1,5,0,5,1 ,5,0,2,1,2 ,-1,-1,1,1,5 ,0,2,5,0,2 ,5,0,1,2,-1 ,5,1,2,3,1 ,5,0,-1]
labels2 = [-1,-1,-1,-1,-1 ,-1,5,0,5,1 ,5,0,2,1,2 ,-1,-1,1,1,5 ,0,-1,2,5,0 ,2,5,0,1,2 ,5,0,1,-1,2 ,-1,-1,-1,3,-1 ,5,0,-1,1,-1 ,-1]
labels3 = [-1,-1,-1,-1,1 ,1,5,0,1,1 ,5,0,5,0,-1 ,-1,-1,2,-1,-1 ,-1,1,1,1,-1 ,1,-1,-1,1,1 ,-1,2,-1,1,-1 ,1,2,-1,1,-1 ,-1,2,5,-1,0 ,-1,1,1]
labels4 = [-1,-1,-1,-1,-1 ,-1,-1,-1,-1,-1 ,-1,-1,-1,-1,-1 ,-1,-1,-1,1,1 ,1,1,1,1,1 ,-1,5,0,2,5 ,0,2,1,2,2 ,-1,-1,-1,1,1 ,1]
labels5 = [-1,-1,2,0,1 ,5,-1,1,1,1 ,1,1,1,1,1 ,1,-1,5,1,0 ,5,1,2,0,5 ,0,2,1,2,2 ,-1,-1,1,1,1 ]
labels6 = [-1,0,1,5,2 ,-1,1,1,1,1 ,5,1,0,5,0 ,2,1,5,0,2 ,2,2,1,-1,-1 ,1,1,1,1,1 ,1,1,1]
labels7 = [-1,-1,-1,-1,-1 ,-1,1,2,2,2 ,2,1,2,2,2 ,1,-1,-1,-1,2 ,1,2,1,1]
labels_all = labels1 + labels2 + labels3 + labels4 + labels5 + labels6 + labels7
```

Creating the datasets

```python
# Prepare template data for "0"
templates1 = [template_dataset(subctrs1[0], 0)]
templates3 = [template_dataset(subctrs3[0], 0)]
templates5 = [template_dataset(subctrs5[0], 0)]
# Prepare template data for other numbers
numbers = [1, 2, 3, 5]
for i,num in enumerate(numbers):
    templates1 += [template_dataset(subctrs1[i+1], num, subctrs1_selected_pts[i])]
    templates3 += [template_dataset(subctrs3[i+1], num, subctrs3_selected_pts[i])]
    templates5 += [template_dataset(subctrs5[i+1], num, subctrs5_selected_pts[i])]
ctr_datasets_all = [contour_dataset(ctr) for ctr in subctrs_all]
```

Running the similarity calculation

```python
templates_sel = [1,1,3,5,5,5,5]
def select_template(i):
    img_idx = original_img_idx[i]
    if templates_sel[img_idx] == 1:
        return templates1
    elif templates_sel[img_idx] == 3:
        return templates3
    elif templates_sel[img_idx] == 5:
        return templates5
    else:
        return templates1

similarities_all = []
converted_imgs_all = []
print(' Contour No. ', end='')
for i,target_ctr in enumerate(ctr_datasets_all):
    templates = select_template(i)
    print(i, ' ', end='')
    sims, imgs = get_similarities(target_ctr, templates)
    similarities_all += [sims]
    converted_imgs_all += [imgs]
```
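The choice of template set per source image could also be written as a table lookup instead of an `if`/`elif` chain. This is just one possible sketch: the strings below are stand-ins for the real lists `templates1`, `templates3` and `templates5`, and `select_template_for_image` is a hypothetical name.

```python
# Stand-in values: in the article these would be the lists templates1, templates3, templates5
template_sets = {1: 'templates1', 3: 'templates3', 5: 'templates5'}

# Template set used for each of the 7 source images
templates_sel = [1, 1, 3, 5, 5, 5, 5]


def select_template_for_image(img_idx):
    # Unknown keys fall back to the first set, like the final else branch
    return template_sets.get(templates_sel[img_idx], template_sets[1])


chosen = [select_template_for_image(i) for i in range(len(templates_sel))]
print(chosen)
```

The 2019 images use the 2019 templates, the 2020 image uses the 2020 templates, and all four 2021 images share the templates built from the fifth image.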

Contour No. 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264

All of the similarities obtained are printed below.

It gets long, though…

```python
for sim, lab in zip(similarities_all, labels_all):
    print('label {:>2}'.format(lab), ': ', end='')
    print_similarity_vector(sim, end='\n')
```

label -1 : [0.825, 0.879, 0.666, 0.649, 0.710, ]

label -1 : [0.820, 0.783, 0.658, 0.667, 0.699, ]

label -1 : [0.841, 0.757, 0.684, 0.676, 0.733, ]

label -1 : [0.816, 0.730, 0.676, 0.699, 0.731, ]

label -1 : [0.844, 0.765, 0.691, 0.708, 0.738, ]

label -1 : [0.860, 0.746, 0.697, 0.681, 0.736, ]

label 5 : [0.717, 0.816, 0.685, 0.812, 0.919, ]

label 0 : [0.868, 0.836, 0.608, 0.679, 0.731, ]

label 5 : [0.746, 0.748, 0.672, 0.792, 0.950, ]

label 1 : [0.729, 0.942, 0.766, 0.743, 0.731, ]

label 5 : [0.731, 0.774, 0.668, 0.781, 0.921, ]

label 0 : [0.942, 0.762, 0.695, 0.669, 0.728, ]

label 2 : [0.660, 0.753, 0.937, 0.754, 0.724, ]

label 1 : [0.744, 0.947, 0.710, 0.702, 0.686, ]

label 2 : [0.650, 0.777, 0.957, 0.754, 0.702, ]

label -1 : [0.682, 0.747, 0.643, 0.630, 0.647, ]

label -1 : [0.796, 0.672, 0.715, 0.783, 0.878, ]

label 1 : [0.709, 0.952, 0.801, 0.784, 0.755, ]

label 1 : [0.625, 0.955, 0.834, 0.807, 0.805, ]

label 5 : [0.732, 0.759, 0.663, 0.786, 0.918, ]

label 0 : [0.967, 0.722, 0.670, 0.659, 0.732, ]

label 2 : [0.665, 0.715, 0.945, 0.751, 0.682, ]

label 5 : [0.719, 0.680, 0.691, 0.801, 0.898, ]

label 0 : [0.963, 0.722, 0.678, 0.664, 0.728, ]

label 2 : [0.670, 0.732, 0.963, 0.754, 0.697, ]

label 5 : [0.750, 0.693, 0.661, 0.674, 0.913, ]

label 0 : [0.895, 0.734, 0.684, 0.629, 0.752, ]

label 1 : [0.744, 0.954, 0.740, 0.708, 0.706, ]

label 2 : [0.681, 0.720, 0.942, 0.750, 0.692, ]

label -1 : [0.820, 0.738, 0.691, 0.713, 0.750, ]

label 5 : [0.671, 0.761, 0.653, 0.610, 0.784, ]

label 1 : [0.699, 0.953, 0.711, 0.688, 0.718, ]

label 2 : [0.621, 0.812, 0.958, 0.745, 0.736, ]

label 3 : [0.701, 0.664, 0.773, 1.000, 0.669, ]

label 1 : [0.542, 0.959, 0.837, 0.809, 0.818, ]

label 5 : [0.737, 0.728, 0.704, 0.782, 0.924, ]

label 0 : [0.865, 0.806, 0.655, 0.669, 0.721, ]

label -1 : [0.690, 0.781, 0.546, 0.677, 0.695, ]

label -1 : [0.840, 0.879, 0.677, 0.662, 0.765, ]

label -1 : [0.827, 0.787, 0.696, 0.688, 0.737, ]

label -1 : [0.844, 0.770, 0.645, 0.645, 0.705, ]

label -1 : [0.829, 0.750, 0.676, 0.751, 0.701, ]

label -1 : [0.862, 0.735, 0.684, 0.691, 0.728, ]

label -1 : [0.855, 0.758, 0.664, 0.664, 0.732, ]

label 5 : [0.709, 0.806, 0.687, 0.792, 0.901, ]

label 0 : [0.879, 0.821, 0.640, 0.667, 0.724, ]

label 5 : [0.734, 0.762, 0.677, 0.706, 0.928, ]

label 1 : [0.728, 0.941, 0.771, 0.757, 0.753, ]

label 5 : [0.726, 0.777, 0.675, 0.730, 0.931, ]

label 0 : [0.932, 0.782, 0.628, 0.660, 0.724, ]

label 2 : [0.657, 0.751, 0.946, 0.752, 0.731, ]

label 1 : [0.748, 0.936, 0.737, 0.708, 0.705, ]

label 2 : [0.636, 0.782, 0.956, 0.758, 0.714, ]

label -1 : [0.780, 0.648, 0.735, 0.787, 0.825, ]

label -1 : [0.687, 0.727, 0.642, 0.646, 0.660, ]

label 1 : [0.708, 0.943, 0.796, 0.778, 0.749, ]

label 1 : [0.618, 0.952, 0.836, 0.811, 0.812, ]

label 5 : [0.734, 0.775, 0.682, 0.785, 0.920, ]

label 0 : [0.962, 0.708, 0.666, 0.664, 0.727, ]

label -1 : [0.778, 0.783, 0.687, 0.701, 0.737, ]

label 2 : [0.663, 0.722, 0.943, 0.748, 0.681, ]

label 5 : [0.739, 0.666, 0.692, 0.812, 0.904, ]

label 0 : [0.962, 0.704, 0.672, 0.665, 0.724, ]

label 2 : [0.668, 0.736, 0.951, 0.746, 0.700, ]

label 5 : [0.750, 0.693, 0.653, 0.675, 0.922, ]

label 0 : [0.905, 0.707, 0.662, 0.630, 0.752, ]

label 1 : [0.745, 0.940, 0.738, 0.712, 0.701, ]

label 2 : [0.685, 0.718, 0.946, 0.755, 0.680, ]

label 5 : [0.750, 0.675, 0.710, 0.794, 0.915, ]

label 0 : [0.827, 0.785, 0.669, 0.627, 0.743, ]

label 1 : [0.695, 0.945, 0.717, 0.692, 0.705, ]

label -1 : [0.775, 0.859, 0.708, 0.691, 0.725, ]

label 2 : [0.610, 0.814, 0.959, 0.748, 0.742, ]

label -1 : [0.798, 0.863, 0.684, 0.697, 0.788, ]

label -1 : [0.805, 0.851, 0.696, 0.675, 0.733, ]

label -1 : [0.786, 0.765, 0.675, 0.659, 0.686, ]

label 3 : [0.708, 0.671, 0.767, 0.969, 0.668, ]

label -1 : [0.942, 0.719, 0.693, 0.695, 0.736, ]

label 5 : [0.750, 0.737, 0.693, 0.781, 0.897, ]

label 0 : [0.845, 0.789, 0.660, 0.674, 0.745, ]

label -1 : [0.802, 0.805, 0.702, 0.701, 0.749, ]

label 1 : [0.533, 0.944, 0.835, 0.813, 0.818, ]

label -1 : [0.796, 0.821, 0.687, 0.696, 0.698, ]

label -1 : [0.776, 0.824, 0.753, 0.714, 0.738, ]

label -1 : [0.824, 0.699, 0.615, 0.639, 0.693, ]

label -1 : [0.694, 0.682, 0.633, 0.664, 0.656, ]

label -1 : [0.868, 0.735, 0.673, 0.691, 0.761, ]

label -1 : [0.825, 0.735, 0.673, 0.680, 0.761, ]

label 1 : [0.691, 0.954, 0.762, 0.784, 0.729, ]

label 1 : [0.668, 0.947, 0.743, 0.743, 0.731, ]

label 5 : [0.765, 0.711, 0.683, 0.706, 0.938, ]

label 0 : [1.000, 0.710, 0.650, 0.628, 0.756, ]

label 1 : [0.646, 0.947, 0.714, 0.707, 0.692, ]

label 1 : [0.666, 0.945, 0.743, 0.732, 0.731, ]

label 5 : [0.679, 0.768, 0.687, 0.752, 0.929, ]

label 0 : [0.843, 0.753, 0.688, 0.635, 0.697, ]

label 5 : [0.759, 0.717, 0.690, 0.681, 0.934, ]

label 0 : [0.956, 0.690, 0.633, 0.625, 0.690, ]

label -1 : [0.825, 0.811, 0.735, 0.761, 0.692, ]

label -1 : [0.811, 0.700, 0.636, 0.668, 0.719, ]

label -1 : [0.793, 0.667, 0.632, 0.641, 0.730, ]

label 2 : [0.650, 0.785, 0.979, 0.762, 0.705, ]

label -1 : [0.784, 0.751, 0.686, 0.691, 0.701, ]

label -1 : [0.847, 0.729, 0.668, 0.679, 0.733, ]

label -1 : [0.808, 0.697, 0.650, 0.660, 0.719, ]

label 1 : [0.741, 0.940, 0.738, 0.715, 0.674, ]

label 1 : [0.671, 0.944, 0.730, 0.717, 0.691, ]

label 1 : [0.670, 0.941, 0.730, 0.721, 0.698, ]

label -1 : [0.797, 0.747, 0.689, 0.692, 0.734, ]

label 1 : [0.685, 0.945, 0.718, 0.695, 0.685, ]

label -1 : [0.822, 0.713, 0.644, 0.692, 0.706, ]

label -1 : [0.814, 0.667, 0.624, 0.650, 0.726, ]

label 1 : [0.706, 0.952, 0.716, 0.713, 0.690, ]

label 1 : [0.639, 0.954, 0.687, 0.689, 0.674, ]

label -1 : [0.798, 0.671, 0.620, 0.654, 0.749, ]

label 2 : [0.662, 0.711, 0.951, 0.753, 0.651, ]

label -1 : [0.811, 0.718, 0.688, 0.723, 0.721, ]

label 1 : [0.639, 0.960, 0.683, 0.690, 0.674, ]

label -1 : [0.802, 0.692, 0.615, 0.658, 0.731, ]

label 1 : [0.702, 0.948, 0.726, 0.715, 0.678, ]

label 2 : [0.601, 0.755, 0.944, 0.754, 0.676, ]

label -1 : [0.819, 0.714, 0.647, 0.730, 0.719, ]

label 1 : [0.677, 0.941, 0.718, 0.706, 0.692, ]

label -1 : [0.823, 0.684, 0.640, 0.672, 0.744, ]

label -1 : [0.648, 0.847, 0.697, 0.666, 0.681, ]

label 2 : [0.592, 0.757, 0.965, 0.757, 0.697, ]

label 5 : [0.753, 0.692, 0.683, 0.684, 0.925, ]

label -1 : [0.648, 0.762, 0.662, 0.664, 0.762, ]

label 0 : [0.956, 0.696, 0.640, 0.629, 0.703, ]

label -1 : [0.811, 0.688, 0.637, 0.671, 0.753, ]

label 1 : [0.678, 0.944, 0.702, 0.689, 0.690, ]

label 1 : [0.656, 0.949, 0.684, 0.703, 0.679, ]

label -1 : [0.561, 0.841, 0.807, 0.802, 0.845, ]

label -1 : [0.791, 0.805, 0.721, 0.730, 0.730, ]

label -1 : [0.846, 0.798, 0.613, 0.641, 0.669, ]

label -1 : [0.797, 0.786, 0.683, 0.681, 0.721, ]

label -1 : [0.849, 0.748, 0.700, 0.676, 0.739, ]

label -1 : [0.867, 0.731, 0.655, 0.648, 0.766, ]

label -1 : [0.874, 0.761, 0.672, 0.641, 0.717, ]

label -1 : [0.833, 0.817, 0.648, 0.674, 0.684, ]

label -1 : [0.908, 0.825, 0.694, 0.703, 0.659, ]

label -1 : [0.950, 0.822, 0.667, 0.693, 0.710, ]

label -1 : [0.894, 0.812, 0.611, 0.679, 0.680, ]

label -1 : [0.854, 0.730, 0.641, 0.644, 0.737, ]

label -1 : [0.894, 0.801, 0.696, 0.696, 0.714, ]

label -1 : [0.806, 0.769, 0.694, 0.658, 0.679, ]

label -1 : [0.908, 0.816, 0.677, 0.696, 0.766, ]

label -1 : [0.700, 0.706, 0.656, 0.650, 0.686, ]

label -1 : [0.804, 0.709, 0.707, 0.770, 0.848, ]

label -1 : [0.749, 0.721, 0.664, 0.695, 0.740, ]

label 1 : [0.706, 0.948, 0.771, 0.721, 0.749, ]

label 1 : [0.684, 0.959, 0.769, 0.728, 0.740, ]

label 1 : [0.658, 0.955, 0.807, 0.763, 0.778, ]

label 1 : [0.641, 0.958, 0.837, 0.798, 0.790, ]

label 1 : [0.512, 0.966, 0.874, 0.829, 0.841, ]

label 1 : [0.696, 0.948, 0.776, 0.726, 0.749, ]

label 1 : [0.684, 0.950, 0.773, 0.722, 0.742, ]

label -1 : [0.502, 0.738, 0.779, 0.647, 0.712, ]

label 5 : [0.731, 0.688, 0.678, 0.792, 0.920, ]

label 0 : [0.931, 0.718, 0.646, 0.630, 0.701, ]

label 2 : [0.652, 0.753, 0.936, 0.755, 0.659, ]

label 5 : [0.738, 0.712, 0.653, 0.688, 0.913, ]

label 0 : [0.904, 0.825, 0.639, 0.635, 0.716, ]

label 2 : [0.653, 0.744, 0.935, 0.749, 0.660, ]

label 1 : [0.639, 0.950, 0.806, 0.758, 0.779, ]

label 2 : [0.640, 0.760, 0.930, 0.750, 0.674, ]

label 2 : [0.632, 0.763, 0.933, 0.751, 0.670, ]

label -1 : [0.639, 0.866, 0.668, 0.667, 0.713, ]

label -1 : [0.593, 0.783, 0.596, 0.620, 0.762, ]

label -1 : [0.524, 0.772, 0.683, 0.692, 0.714, ]

label 1 : [0.698, 0.948, 0.756, 0.710, 0.746, ]

label 1 : [0.765, 0.947, 0.749, 0.705, 0.716, ]

label 1 : [0.667, 0.950, 0.791, 0.732, 0.762, ]

label -1 : [0.826, 0.729, 0.671, 0.645, 0.703, ]

label -1 : [0.827, 0.738, 0.665, 0.650, 0.723, ]

label 2 : [0.628, 0.794, 0.939, 0.756, 0.670, ]

label 0 : [0.812, 0.814, 0.643, 0.620, 0.702, ]

label 1 : [0.556, 0.970, 0.867, 0.825, 0.738, ]

label 5 : [0.639, 0.775, 0.670, 0.784, 0.964, ]

label -1 : [0.473, 0.880, 0.593, 0.620, 0.661, ]

label 1 : [0.659, 0.959, 0.840, 0.745, 0.738, ]

label 1 : [0.770, 0.939, 0.776, 0.718, 0.738, ]

label 1 : [0.704, 0.951, 0.778, 0.726, 0.737, ]

label 1 : [0.713, 0.949, 0.784, 0.748, 0.733, ]

label 1 : [0.637, 0.951, 0.816, 0.756, 0.723, ]

label 1 : [0.701, 0.945, 0.831, 0.750, 0.752, ]

label 1 : [0.511, 0.955, 0.867, 0.829, 0.835, ]

label 1 : [0.708, 0.930, 0.788, 0.743, 0.734, ]

label 1 : [0.674, 0.951, 0.804, 0.724, 0.743, ]

label -1 : [0.909, 0.806, 0.682, 0.671, 0.690, ]

label 5 : [0.624, 0.674, 0.638, 0.662, 0.919, ]

label 1 : [0.636, 0.946, 0.806, 0.763, 0.733, ]

label 0 : [0.840, 0.820, 0.620, 0.627, 0.713, ]

label 5 : [0.710, 0.685, 0.683, 0.681, 0.912, ]

label 1 : [0.722, 0.942, 0.823, 0.780, 0.737, ]

label 2 : [0.633, 0.760, 0.939, 0.767, 0.675, ]

label 0 : [0.925, 0.727, 0.645, 0.649, 0.690, ]

label 5 : [0.780, 0.722, 0.641, 0.661, 0.931, ]

label 0 : [0.932, 0.750, 0.656, 0.633, 0.716, ]

label 2 : [0.653, 0.723, 0.938, 0.762, 0.641, ]

label 1 : [0.667, 0.917, 0.815, 0.761, 0.734, ]

label 2 : [0.693, 0.757, 0.922, 0.744, 0.687, ]

label 2 : [0.675, 0.738, 0.931, 0.753, 0.655, ]

label -1 : [0.581, 0.792, 0.594, 0.588, 0.699, ]

label -1 : [0.578, 0.823, 0.707, 0.668, 0.700, ]

label 1 : [0.704, 0.957, 0.713, 0.668, 0.743, ]

label 1 : [0.784, 0.927, 0.759, 0.718, 0.705, ]

label 1 : [0.728, 0.948, 0.756, 0.704, 0.751, ]

label -1 : [0.748, 0.825, 0.723, 0.730, 0.782, ]

label 0 : [0.887, 0.816, 0.638, 0.672, 0.681, ]

label 1 : [0.506, 0.960, 0.864, 0.842, 0.852, ]

label 5 : [0.704, 0.795, 0.679, 0.799, 0.926, ]

label 2 : [0.588, 0.763, 0.923, 0.752, 0.676, ]

label -1 : [0.507, 0.676, 0.625, 0.583, 0.567, ]

label 1 : [0.563, 0.922, 0.856, 0.820, 0.832, ]

label 1 : [0.727, 0.938, 0.820, 0.791, 0.779, ]

label 1 : [0.748, 0.937, 0.736, 0.714, 0.754, ]

label 1 : [0.751, 0.937, 0.763, 0.728, 0.775, ]

label 5 : [0.608, 0.700, 0.751, 0.711, 0.911, ]

label 1 : [0.729, 0.879, 0.789, 0.756, 0.684, ]

label 0 : [0.786, 0.820, 0.623, 0.615, 0.703, ]

label 5 : [0.677, 0.712, 0.638, 0.816, 0.885, ]

label 0 : [0.881, 0.804, 0.612, 0.624, 0.705, ]

label 2 : [0.615, 0.789, 0.944, 0.771, 0.665, ]

label 1 : [0.605, 0.951, 0.846, 0.807, 0.809, ]

label 5 : [0.706, 0.694, 0.668, 0.698, 0.912, ]

label 0 : [0.929, 0.736, 0.664, 0.646, 0.695, ]

label 2 : [0.625, 0.736, 0.918, 0.763, 0.659, ]

label 2 : [0.674, 0.736, 0.919, 0.777, 0.657, ]

label 2 : [0.672, 0.740, 0.930, 0.746, 0.667, ]

label 1 : [0.706, 0.941, 0.765, 0.729, 0.751, ]

label -1 : [0.566, 0.826, 0.604, 0.581, 0.680, ]

label -1 : [0.587, 0.828, 0.695, 0.674, 0.676, ]

label 1 : [0.758, 0.884, 0.736, 0.702, 0.679, ]

label 1 : [0.784, 0.925, 0.756, 0.736, 0.742, ]

label 1 : [0.750, 0.933, 0.714, 0.690, 0.746, ]

label 1 : [0.709, 0.925, 0.799, 0.764, 0.711, ]

label 1 : [0.567, 0.959, 0.852, 0.809, 0.740, ]

label 1 : [0.578, 0.938, 0.850, 0.814, 0.822, ]

label 1 : [0.767, 0.938, 0.717, 0.696, 0.772, ]

label 1 : [0.727, 0.935, 0.768, 0.739, 0.744, ]

label -1 : [0.851, 0.864, 0.698, 0.668, 0.646, ]

label -1 : [0.811, 0.783, 0.728, 0.724, 0.762, ]

label -1 : [0.960, 0.817, 0.667, 0.725, 0.724, ]

label -1 : [0.818, 0.827, 0.724, 0.692, 0.776, ]

label -1 : [0.796, 0.750, 0.694, 0.659, 0.664, ]

label -1 : [0.872, 0.776, 0.711, 0.697, 0.678, ]

label 1 : [0.755, 0.939, 0.801, 0.753, 0.738, ]

label 2 : [0.664, 0.759, 0.933, 0.752, 0.686, ]

label 2 : [0.645, 0.762, 0.933, 0.756, 0.683, ]

label 2 : [0.669, 0.760, 0.934, 0.752, 0.686, ]

label 2 : [0.640, 0.794, 0.932, 0.764, 0.669, ]

label 1 : [0.726, 0.946, 0.722, 0.670, 0.716, ]

label 2 : [0.654, 0.742, 0.931, 0.767, 0.680, ]

label 2 : [0.606, 0.841, 0.929, 0.750, 0.658, ]

label 2 : [0.587, 0.841, 0.922, 0.766, 0.691, ]

label 1 : [0.680, 0.941, 0.838, 0.783, 0.784, ]

label -1 : [0.557, 0.829, 0.675, 0.598, 0.679, ]

label -1 : [0.509, 0.771, 0.691, 0.645, 0.692, ]

label -1 : [0.518, 0.814, 0.723, 0.639, 0.694, ]

label 2 : [0.637, 0.800, 0.935, 0.765, 0.670, ]

label 1 : [0.736, 0.926, 0.743, 0.700, 0.743, ]

label 2 : [0.658, 0.780, 0.949, 0.760, 0.672, ]

label 1 : [0.738, 0.936, 0.825, 0.778, 0.768, ]

label 1 : [0.728, 0.938, 0.718, 0.671, 0.730, ]

SVM training

Train the SVM.

If the results are good, I will save the trained SVM model and use it from now on.

Contour No. 30 is known to come from a failed contour detection, so it is removed.

```python
svm_inputs = copy.deepcopy(similarities_all)
svm_labels = copy.deepcopy(labels_all)
# Remove inadequate contour data in img1
del svm_inputs[30]
del svm_labels[30]
train_data, train_labels = get_random_sample(svm_inputs, svm_labels, [-1,0,1,2,3,5], 20, seed=123)
svm = prepare_svm(train_data, train_labels)
```

SVM inference

Check the performance of the trained SVM model.

```python
result = svm.predict(np.array(svm_inputs, 'float32'))
print_stat(result, svm_labels)
```

label -1 : {-1: 73, 0: 13, 1: 0, 2: 0, 3: 0, 5: 3, }

label 0 : {-1: 5, 0: 22, 1: 0, 2: 0, 3: 0, 5: 0, }

label 1 : {-1: 0, 0: 0, 1: 78, 2: 0, 3: 0, 5: 0, }

label 2 : {-1: 0, 0: 0, 1: 0, 2: 39, 3: 0, 5: 0, }

label 3 : {-1: 0, 0: 0, 1: 0, 2: 0, 3: 2, 5: 0, }

label 5 : {-1: 0, 0: 0, 1: 0, 2: 0, 3: 0, 5: 29, }

Every digit contour other than "0" is classified correctly, but several non-digit contours are misclassified (13 as "0" and 3 as "5"), and 5 of the "0" contours are rejected as non-digits.

Let's look at the misclassified contours.

subimgs = copy.deepcopy(subimgs_all)
subctrs = copy.deepcopy(subctrs_all)
del subimgs[30]
del subctrs[30]
for i,(sims,lab,res,img,ctr) in enumerate(zip(svm_inputs, svm_labels, result[1], subimgs, subctrs)):
    if lab != res[0]:
        # Print index, "true label -> predicted label", and the five similarities
        print('No.', i)
        print('{: }'.format(lab), ' -> ', '{: d}'.format(int(res[0])), ' [',end='')
        for s in sims:
            print('{:.3f}, '.format(s), end='')
        print(']')
        img = cv2.drawContours(img, [ctr], -1, (0,255,0), 1)
        plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB)),plt.xticks([]),plt.yticks([])
        plt.show()

No. 16

-1 -> 5 [0.796, 0.672, 0.715, 0.783, 0.878, ]

No. 37

-1 -> 0 [0.840, 0.879, 0.677, 0.662, 0.765, ]

No. 52

-1 -> 5 [0.780, 0.648, 0.735, 0.787, 0.825, ]

No. 68

0 -> -1 [0.827, 0.785, 0.669, 0.627, 0.743, ]

No. 76

-1 -> 0 [0.942, 0.719, 0.693, 0.695, 0.736, ]

No. 78

0 -> -1 [0.845, 0.789, 0.660, 0.674, 0.745, ]

No. 94

0 -> -1 [0.843, 0.753, 0.688, 0.635, 0.697, ]

No. 133

-1 -> 0 [0.846, 0.798, 0.613, 0.641, 0.669, ]

No. 136

-1 -> 0 [0.867, 0.731, 0.655, 0.648, 0.766, ]

No. 137

-1 -> 0 [0.874, 0.761, 0.672, 0.641, 0.717, ]

No. 139

-1 -> 0 [0.908, 0.825, 0.694, 0.703, 0.659, ]

No. 140

-1 -> 0 [0.950, 0.822, 0.667, 0.693, 0.710, ]

No. 141

-1 -> 0 [0.894, 0.812, 0.611, 0.679, 0.680, ]

No. 142

-1 -> 0 [0.854, 0.730, 0.641, 0.644, 0.737, ]

No. 143

-1 -> 0 [0.894, 0.801, 0.696, 0.696, 0.714, ]

No. 145

-1 -> 0 [0.908, 0.816, 0.677, 0.696, 0.766, ]

No. 147

-1 -> 5 [0.804, 0.709, 0.707, 0.770, 0.848, ]

No. 175

0 -> -1 [0.812, 0.814, 0.643, 0.620, 0.702, ]

No. 188

-1 -> 0 [0.909, 0.806, 0.682, 0.671, 0.690, ]

No. 219

0 -> -1 [0.786, 0.820, 0.623, 0.615, 0.703, ]

No. 242

-1 -> 0 [0.960, 0.817, 0.667, 0.725, 0.724, ]
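The counts that print_stat printed before this failure list form a confusion matrix (outer key: true label, inner key: predicted label), so overall accuracy and per-class recall can be computed from them. The sketch below only re-enters those printed numbers; the dictionary layout and the variable names are my own and are not part of the notebook.

```python
# Counts copied from the print_stat output: {true_label: {predicted_label: count}}
confusion = {
    -1: {-1: 73, 0: 13, 1: 0, 2: 0, 3: 0, 5: 3},
     0: {-1: 5, 0: 22, 1: 0, 2: 0, 3: 0, 5: 0},
     1: {-1: 0, 0: 0, 1: 78, 2: 0, 3: 0, 5: 0},
     2: {-1: 0, 0: 0, 1: 0, 2: 39, 3: 0, 5: 0},
     3: {-1: 0, 0: 0, 1: 0, 2: 0, 3: 2, 5: 0},
     5: {-1: 0, 0: 0, 1: 0, 2: 0, 3: 0, 5: 29},
}

# Overall accuracy: diagonal entries divided by all entries
total = sum(sum(row.values()) for row in confusion.values())
correct = sum(row[label] for label, row in confusion.items())
accuracy = correct / total

# Recall per true label: fraction of that label's contours predicted correctly
recall = {label: row[label] / sum(row.values()) for label, row in confusion.items()}

print('accuracy: {:.3f} ({}/{})'.format(accuracy, correct, total))
for label, r in recall.items():
    print('label {: d}: recall {:.3f}'.format(label, r))
```

Overall 243 of 264 contours are correct, and the 21 errors match the 21 entries in the failure list, all confined to the non-digit and "0" classes.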

Retraining the SVM

Add some of the misclassified contour data to the training data.

Three non-digit contours were classified as "5"; two of them (No. 16 and No. 52) are added.

Then run the classification again.

train_data += [svm_inputs[16], svm_inputs[52]]
train_labels += [svm_labels[16], svm_labels[52]]
svm = prepare_svm(train_data, train_labels)
result = svm.predict(np.array(svm_inputs, 'float32'))
print_stat(result, svm_labels)

label -1 : {-1: 75, 0: 13, 1: 0, 2: 0, 3: 0, 5: 1, }

label 0 : {-1: 5, 0: 22, 1: 0, 2: 0, 3: 0, 5: 0, }

label 1 : {-1: 0, 0: 0, 1: 78, 2: 0, 3: 0, 5: 0, }

label 2 : {-1: 0, 0: 0, 1: 0, 2: 39, 3: 0, 5: 0, }

label 3 : {-1: 0, 0: 0, 1: 0, 2: 0, 3: 2, 5: 0, }

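label 5 : {-1: 0, 0: 0, 1: 0, 2: 0, 3: 0, 5: 29, }

I had hoped that every "5" would now be told apart correctly, but that did not happen: one non-digit contour is still classified as "5".

I will move on as it is.

Saving the data

The template data and the SVM model obtained so far are saved for use in the point-counting application.

Saving the SVM model

The SVM has a save method, so I use that.

For the template data, looking up how to save Python objects turns up two options: pickle and json.

With pickle, loading data of unknown origin can execute unintended code, which is a known vulnerability. Here I only want to load data I prepared myself, so it should not really be a problem.

Still, getting to know json seems more useful going forward, so I use json.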
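To make the pickle concern concrete, here is a minimal sketch using only the standard library. The class name Payload is hypothetical, and len is a harmless stand-in for whatever callable a malicious file could name. It shows that pickle.loads() invokes a callable recorded in the data, whereas json.loads() only builds plain values.

```python
import json
import pickle


class Payload:
    # pickle calls __reduce__ when dumping; the returned (callable, args)
    # pair is stored in the byte stream and invoked again when loading.
    def __reduce__(self):
        return (len, ('abc',))  # harmless stand-in for an attacker-chosen call


blob = pickle.dumps(Payload())
loaded = pickle.loads(blob)   # runs len('abc') during loading
print(loaded)                 # the call's result, not a Payload instance

# json.loads() only ever produces dict / list / str / number / bool / None
parsed = json.loads('{"num": 0, "pts": [0]}')
print(parsed)
```

Because the JSON parser never calls anything named inside the file, a template file from an untrusted source can at worst contain wrong numbers.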

Official Python documentation (pickle, json) and related articles:

https://docs.python.org/ja/3/library/pickle.html

https://docs.python.org/ja/3/library/json.html

https://hibiki-press.tech/python/json/1633

https://note.nkmk.me/python-json-load-dump/

svm.save('harupan_data/harupan_svm.dat')

The contents of the generated file look like this.

It is plain text and holds all of the SVM information, including the settings I specified.

%YAML:1.0

---

opencv_ml_svm:

format: 3

svmType: C_SVC

kernel:

type: LINEAR

C: 100.

term_criteria: { epsilon:1.1920928955078125e-07, iterations:1000 }

var_count: 5

class_count: 6

class_labels: !!opencv-matrix

rows: 6

cols: 1

dt: i

data: [ -1, 0, 1, 2, 3, 5 ]

sv_total: 15

support_vectors:

- [ -1.80685959e+01, -5.69025517e+00, 1.04596977e+01,

7.75204945e+00, -2.17070246e+00 ]

- [ 1.23291440e-01, -1.82865601e+01, -6.46431494e+00,

4.22870070e-01, 6.76044846e+00 ]

...

- [ -2.71152169e-01, -2.04700381e-01, 1.73537850e+00,

3.85429978e+00, -5.29199266e+00 ]

uncompressed_sv_total: 38

uncompressed_support_vectors:

- [ 8.49453330e-01, 7.48484850e-01, 6.99999988e-01, 6.75757587e-01,

7.39393950e-01 ]

- [ 8.43867898e-01, 7.64705896e-01, 6.90823317e-01, 7.08056450e-01,

7.38181829e-01 ]

...

- [ 7.79716969e-01, 6.48148119e-01, 7.35420227e-01, 7.86544859e-01,

8.25454533e-01 ]

decision_functions:

-

sv_count: 1

rho: -9.4865848064106029e+00

alpha: [ 1. ]

index: [ 0 ]

-

sv_count: 1

rho: -1.5486015742808821e+01

alpha: [ 1. ]

index: [ 1 ]

-

sv_count: 1

rho: 5.0411393634237656e-01

alpha: [ 1. ]

index: [ 2 ]

...

-

sv_count: 1

rho: 2.3617392745496142e+00

alpha: [ 1. ]

index: [ 13 ]

-

sv_count: 1

rho: 1.9971712154702148e-01

alpha: [ 1. ]

index: [ 14 ]

Load the saved data and check it.

For loading the model in Python, see the following.

https://qiita.com/color_box/items/6f7d06fc3d65c6913ebf

svm_restored = cv2.ml.SVM_load('harupan_data/harupan_svm.dat')
result = svm_restored.predict(np.array(svm_inputs, 'float32'))
print_stat(result, svm_labels)

label -1 : {-1: 75, 0: 13, 1: 0, 2: 0, 3: 0, 5: 1, }

label 0 : {-1: 5, 0: 22, 1: 0, 2: 0, 3: 0, 5: 0, }

label 1 : {-1: 0, 0: 0, 1: 78, 2: 0, 3: 0, 5: 0, }

label 2 : {-1: 0, 0: 0, 1: 0, 2: 39, 3: 0, 5: 0, }

label 3 : {-1: 0, 0: 0, 1: 0, 2: 0, 3: 2, 5: 0, }

label 5 : {-1: 0, 0: 0, 1: 0, 2: 0, 3: 0, 5: 29, }

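The results are the same as before, so no problem.

Saving the template data (json)

json cannot handle an ndarray as is, so each array is converted to a list before saving.

On loading, the lists are converted back to ndarray.

Dictionaries also map well onto the JSON format, so I combine the two.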

https://wtnvenga.hatenablog.com/entry/2018/05/27/113848

https://note.nkmk.me/python-numpy-list/
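As a small illustration of the list conversion described above, the following sketch round-trips one made-up contour through JSON. The point values and variable names are placeholders of mine, and passing dtype explicitly on the way back is my own choice, since JSON does not record the array dtype.

```python
import json
import numpy as np

# Contour in the (n, 1, 2) layout used by cv2.findContours(); values are made up
ctr = np.array([[[18, 52]], [[16, 52]], [[15, 52]]], dtype=np.int32)

# Passing the ndarray directly fails because json cannot serialize it
try:
    json.dumps({'ctr': ctr})
    direct_ok = True
except TypeError:
    direct_ok = False

# ndarray -> nested list -> JSON text -> nested list -> ndarray
text = json.dumps({'num': 0, 'ctr': ctr.tolist(), 'pts': [0]})
record = json.loads(text)
restored = np.array(record['ctr'], dtype=np.int32)

print(direct_ok, restored.shape, np.array_equal(restored, ctr))
```

The nested list keeps the (n, 1, 2) shape, so np.array() can rebuild the same array without any extra reshaping.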

For the template data, the contour data and the list of selected points are saved.

During setup, these data are passed to the class defined above to create the instances.

import json

# ctr_list: List of contours for (0, 1, 2, 3, 5)
# pts_idx_list: List of selected point indices for (1, 2, 3, 5)
def save_templates(filename, ctr_list, pts_idx_list):
    with open(filename, mode='w') as f:
        save_data = []
        # Template for "0" has no selected points, so a dummy index list [0] is stored
        save_data += [{'num': 0, 'ctr': ctr_list[0].tolist(), 'pts': [0]}]
        for num, ctr, pts_idx in zip([1,2,3,5], ctr_list[1:5], pts_idx_list):
            save_data += [{'num': num, 'ctr': ctr.tolist(), 'pts': pts_idx}]
        json.dump(save_data, f, indent=2)
    return

def load_templates(filename):
    with open(filename, mode='r') as f:
        load_data = json.load(f)
        templates_rtn = []
        for d in load_data:
            # int32 is the dtype cv2.findContours() returns; np.array() alone can yield
            # int64 on some platforms, which OpenCV contour functions may reject
            templates_rtn += [template_dataset(np.array(d['ctr'], dtype='int32'), d['num'], d['pts'])]
    return templates_rtn

save_templates('harupan_data/templates2019.json', subctrs1, subctrs1_selected_pts)
save_templates('harupan_data/templates2020.json', subctrs3, subctrs3_selected_pts)
save_templates('harupan_data/templates2021.json', subctrs5, subctrs5_selected_pts)

The generated data looks like this.

[

{

"num": 0,

"ctr": [

[

[

18,

52

]

],

[

[

16,

52

]

],

[

[

15,

52

]

],

...

[

[

19,

52

]

],

[

[

19,

52

]

]

],

"pts": [

0

]

},

{

"num": 1,

"ctr": [

[

[

0,

2

]

],

[

[

1,

0

]

],

[

[

1,

0

]

],

...

[

[

0,

4

]

],

[

[

0,

3

]

]

],

"pts": [

0,

5,

10,

15,

20,

25,

30,

35,

40,

45,

50,

55,

60,

65,

70,

75,

80,

85,

90,

95,

100,

105,

110,

115,

120,

125,

130,

135,

140

]

},

{

"num": 2,

"ctr": [

[

[

12,

1

]

],

[

[

13,

0

]

],

...

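It is not easy to read, but I do not intend to look at it directly, so that is not a problem.

Since it is saved as text, the amount of data is needlessly large, though...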

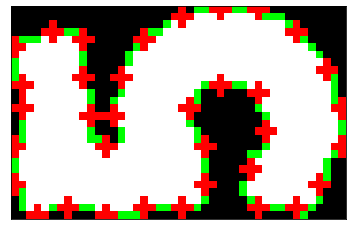
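If the file size ever matters, much of the overhead comes from indent=2, which puts every coordinate on its own line. The sketch below compares the indented form with a compact form on a made-up record. The data is a placeholder and I have not measured the real template files.

```python
import json

# Dummy template-like record with 200 made-up contour points
data = [{'num': 0, 'ctr': [[[x, 52]] for x in range(200)], 'pts': [0]}]

pretty = json.dumps(data, indent=2)                 # same style as save_templates()
compact = json.dumps(data, separators=(',', ':'))   # no indentation, no spaces

# Both strings decode to the same data; only the length differs
print(len(pretty), len(compact))
print(json.loads(pretty) == json.loads(compact))
```

Dropping indent (and optionally passing separators) to json.dump() would give the smaller form, at the cost of a file that is even harder to read by eye.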

I also confirmed that the data can be restored correctly.

templates1_restored = load_templates('harupan_data/templates2019.json')
templates3_restored = load_templates('harupan_data/templates2020.json')
templates5_restored = load_templates('harupan_data/templates2021.json')

def disp_template(template):
    img = cv2.cvtColor(template.solid.copy(), cv2.COLOR_GRAY2RGB)
    # The "0" template holds an upright-rotated solid image, so its contour is not drawn
    if template.num != 0:
        img = cv2.drawContours(img, [template.ctr], -1, (0,255,0), 1)
    for p in template.pts:
        img = cv2.drawMarker(img, p, (255,0,0), markerType=cv2.MARKER_CROSS, markerSize=3)
    plt.imshow(img), plt.xticks([]), plt.yticks([])
    plt.show()

print('Template 2019')
for t in templates1_restored:
    disp_template(t)
print('Template 2020')
for t in templates3_restored:
    disp_template(t)
print('Template 2021')
for t in templates5_restored:
    disp_template(t)

Template 2019

Template 2020

Template 2021

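Looks fine.

Turning it into a script

I want to save the processing so far as a Python script, so that importing it loads all the necessary functions.

I created the following script (harupan.py).

Hopefully this tidies up the Jupyter notebook.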

######################################################
# Importing libraries
######################################################
import cv2
import numpy as np
from matplotlib import pyplot as plt
import math
import copy
import random
import json

######################################################
# Detecting contours
######################################################
def detect_candidate_contours(image, res_th=800):
    h, w, chs = image.shape
    if h > res_th or w > res_th:
        k = float(res_th)/h if w > h else float(res_th)/w
    else:
        k = 1.0
    img = cv2.resize(image, None, fx=k, fy=k, interpolation=cv2.INTER_AREA)
    hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
    # Convert hue value (rotation, mask by saturation)
    hsv[:,:,0] = np.where(hsv[:,:,0] < 50, hsv[:,:,0]+180, hsv[:,:,0])
    hsv[:,:,0] = np.where(hsv[:,:,1] < 100, 0, hsv[:,:,0])
    # Thresholding with cv2.inRange()
    th_hue = cv2.inRange(hsv[:,:,0], 135, 190)
    # Retrieve all points on the contours (cv2.CHAIN_APPROX_NONE)
    contours, hierarchy = cv2.findContours(th_hue, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)
    indices0 = [i for i,hier in enumerate(hierarchy[0,:,:]) if hier[3] == -1]
    indices1 = [i for i,hier in enumerate(hierarchy[0,:,:]) if hier[3] in indices0]
    contours1 = [contours[i] for i in indices1]
    contours1_filtered = [ctr for ctr in contours1 if cv2.contourArea(ctr) > float(res_th)*float(res_th)/4000]
    return contours1_filtered, img

######################################################
# Auxiliary functions
######################################################
def create_contour_area_image(img, ctr):
    x,y,w,h = cv2.boundingRect(ctr)
    rtn_img = img[y:y+h,x:x+w,:].copy()
    rtn_ctr = ctr.copy()
    origin = np.array([x,y])
    for c in rtn_ctr:
        c[0,:] -= origin
    return rtn_img, rtn_ctr

# ctr: Should be output of create_contour_area_image() (Origin of points is the origin of bounding box)
# img_shape: Optional, tuple of (image_height, image_width), if omitted, calculated from ctr
def create_solid_contour(ctr, img_shape=(int(0),int(0))):
    if img_shape == (int(0),int(0)):
        _,_,w,h = cv2.boundingRect(ctr)
    else:
        h,w = img_shape
    img = np.zeros((h,w), 'uint8')
    img = cv2.drawContours(img, [ctr], -1, 255, -1)
    return img

# ctr: Should be output of create_contour_area_image() (Origin of points is the origin of bounding box)
# Note: ctr is modified in place (rotated and shifted)
def create_upright_solid_contour(ctr):
    (cx,cy),(w,h),angle = cv2.minAreaRect(ctr)
    M = cv2.getRotationMatrix2D((cx,cy), angle, 1)
    for i in range(ctr.shape[0]):
        ctr[i,0,:] = ( M @ np.array([ctr[i,0,0], ctr[i,0,1], 1]) ).astype('int')
    rect = cv2.boundingRect(ctr)
    img = np.zeros((rect[3],rect[2]), 'uint8')
    ctr -= rect[0:2]
    M[:,2] -= rect[0:2]
    img = cv2.drawContours(img, [ctr], -1, 255,-1)
    return img, M, ctr

######################################################
# Dataset classes
######################################################
class contour_dataset:
    def __init__(self, ctr):
        self.ctr = ctr.copy()
        self.rrect = cv2.minAreaRect(ctr)
        self.box = cv2.boxPoints(self.rrect)
        self.solid = create_solid_contour(ctr)
        self.pts = np.array([p for p in ctr[:,0,:]])

class template_dataset:
    def __init__(self, ctr, num, selected_idx=[0]):
        self.ctr = ctr.copy()
        self.num = num
        self.rrect = cv2.minAreaRect(ctr)
        self.box = cv2.boxPoints(self.rrect)
        if num == 0:
            self.solid,_,_ = create_upright_solid_contour(ctr)
        else:
            self.solid = create_solid_contour(ctr)
        self.pts = np.array([ctr[idx,0,:] for idx in selected_idx])

######################################################
# ICP
######################################################
# pts: list of 2D points, or ndarray of shape (n,2)
# query: 2D point to find nearest neighbor
def find_nearest_neighbor(pts, query):
    min_distance_sq = float('inf')
    min_idx = 0
    for i, p in enumerate(pts):
        d = np.dot(query - p, query - p)
        if(d < min_distance_sq):
            min_distance_sq = d
            min_idx = i
    return min_idx, np.sqrt(min_distance_sq)

# src, dst: ndarray, shape is (n,2) (n: number of points)
def estimate_affine_2d(src, dst):
    n = min(src.shape[0], dst.shape[0])
    x = dst[0:n].flatten()
    A = np.zeros((2*n,6))
    for i in range(n):
        A[i*2,0] = src[i,0]
        A[i*2,1] = src[i,1]
        A[i*2,2] = 1
        A[i*2+1,3] = src[i,0]
        A[i*2+1,4] = src[i,1]
        A[i*2+1,5] = 1
    # Least-squares solution via the normal equations
    M = np.linalg.inv(A.T @ A) @ A.T @ x
    return M.reshape([2,3])

# Find optimum affine matrix using ICP algorithm
# src_pts: ndarray, shape is (n_s,2) (n_s: number of points)
# dst_pts: ndarray, shape is (n_d,2) (n_d: number of points, n_d should be larger or equal to n_s)
# initial_matrix: ndarray, shape is (2,3)
def icp(src_pts, dst_pts, max_iter=20, initial_matrix=np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])):
    default_affine_matrix = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
    if dst_pts.shape[0] < src_pts.shape[0]:
        print("icp: Insufficient destination points")
        return default_affine_matrix, False
    if initial_matrix.shape != (2,3):
        print("icp: Illegal shape of initial_matrix")
        return default_affine_matrix, False
    M = initial_matrix
    # Store indices of the nearest neighbor point of dst_pts to the converted point of src_pts
    nn_idx = []
    for i in range(max_iter):
        nn_idx_tmp = []
        dst_pts_list = [p for p in dst_pts]
        idx_list = list(range(0,dst_pts.shape[0]))
        for p in src_pts:
            p2 = M @ np.array([p[0], p[1], 1])
            idx, d = find_nearest_neighbor(dst_pts_list, p2)
            nn_idx_tmp += [idx_list[idx]]
            del dst_pts_list[idx]
            del idx_list[idx]
        if nn_idx != [] and nn_idx == nn_idx_tmp:
            break
        dst_pts2 = np.zeros_like(src_pts)
        for j,idx in enumerate(nn_idx_tmp):
            dst_pts2[j,:] = dst_pts[idx,:]
        M = estimate_affine_2d(src_pts, dst_pts2)
        nn_idx = nn_idx_tmp
        if i == max_iter -1:
            return M, False
    return M, True

######################################################
# Calculating similarity and determining the number
######################################################
def binary_image_similarity(img1, img2):
    if img1.shape != img2.shape:
        print('binary_image_similarity: Different image size')
        return 0.0
    xor_img = cv2.bitwise_xor(img1, img2)
    # np.float was removed in NumPy 1.24; the built-in float is equivalent here
    return 1.0 - float(np.count_nonzero(xor_img)) / (img1.shape[0]*img2.shape[1])

# src, dst: contour_dataset or template_dataset (holding member variables box, solid)
def get_transform_by_rotated_rectangle(src, dst):
    # Rotated patterns are created when starting index is slid
    dst_box2 = np.vstack([dst.box, dst.box])
    max_similarity = 0.0
    # Fallbacks in case no candidate scores above 0: identity transform, blank (h, w) image
    M_rtn = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
    max_converted_img = np.zeros(dst.solid.shape, 'uint8')
    for i in range(4):
        M = cv2.getAffineTransform(src.box[0:3], dst_box2[i:i+3])
        converted_img = cv2.warpAffine(src.solid, M, dsize=(dst.solid.shape[1], dst.solid.shape[0]), flags=cv2.INTER_NEAREST)
        similarity = binary_image_similarity(converted_img, dst.solid)
        if similarity > max_similarity:
            M_rtn = M
            max_similarity = similarity
            max_converted_img = converted_img
    return M_rtn, max_similarity, max_converted_img

def get_similarity_with_template(target_data, template_data, sim_th_high=0.95, sim_th_low=0.7):
    _,(w1,h1), _ = target_data.rrect
    _,(w2,h2), _ = template_data.rrect
    r = w1/h1 if w1 < h1 else h1/w1
    r = r * h2/w2 if w2 < h2 else r * w2/h2
    M, sim_init, _ = get_transform_by_rotated_rectangle(template_data, target_data)
    # Skip ICP when the initial match is already decisive or the aspect ratios differ too much
    if sim_init > sim_th_high or sim_init < sim_th_low or r > 1.4 or r < 0.7:
        dsize = (template_data.solid.shape[1], template_data.solid.shape[0])
        flags = cv2.INTER_NEAREST|cv2.WARP_INVERSE_MAP
        converted_img = cv2.warpAffine(target_data.solid, M, dsize=dsize, flags=flags)
        return sim_init, converted_img
    M, _ = icp(template_data.pts, target_data.pts, initial_matrix=M)
    Minv = cv2.invertAffineTransform(M)
    converted_ctr = np.zeros_like(target_data.ctr)
    for i in range(target_data.ctr.shape[0]):
        converted_ctr[i,0,:] = (Minv[:,0:2] @ target_data.ctr[i,0,:]) + Minv[:,2]
    converted_img = create_solid_contour(converted_ctr, img_shape=template_data.solid.shape)
    val = binary_image_similarity(converted_img, template_data.solid)
    return val, converted_img

def get_similarity_with_template_zero(target_data, template_data):
    dsize = (template_data.solid.shape[1], template_data.solid.shape[0])
    converted_img = cv2.resize(target_data.solid, dsize=dsize, interpolation=cv2.INTER_NEAREST)
    val = binary_image_similarity(converted_img, template_data.solid)
    return val, converted_img

def get_similarities(target, templates):
    similarities = []
    converted_imgs = []
    for tmpl in templates:
        if tmpl.num == 0:
            sim,converted_img = get_similarity_with_template_zero(target, tmpl)
        else:
            sim,converted_img = get_similarity_with_template(target, tmpl)
        similarities += [sim]
        converted_imgs += [converted_img]
    return similarities, converted_imgs

# target: Single contour to compare
# templates: List of template_dataset (for numbers 0, 1, 2, 3, 5)
# svm: Trained SVM
# return: determined number (0,1,2,3,5), -1 if none corresponds
def determine_number(target, templates, svm):
    similarities,_ = get_similarities(target, templates)
    # predict() expects a float32 matrix with one sample per row, as in the notebook calls
    _, result = svm.predict(np.array([similarities], 'float32'))
    return int(result[0,0])

######################################################
# Loading template data and SVM model
######################################################
def load_svm(filename):
    return cv2.ml.SVM_load(filename)

def load_templates(filename):
    with open(filename, mode='r') as f:
        load_data = json.load(f)
        templates_rtn = []
        for d in load_data:
            # int32 is the dtype cv2.findContours() returns; np.array() alone can yield
            # int64 on some platforms, which OpenCV contour functions may reject
            templates_rtn += [template_dataset(np.array(d['ctr'], dtype='int32'), d['num'], d['pts'])]
    return templates_rtn
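The similarity used throughout the script is simply the fraction of pixels on which two same-sized binary images agree. As a sanity check, here is a NumPy-only stand-in for binary_image_similarity() that avoids the OpenCV dependency. The name binary_similarity is hypothetical, and raising an exception on a shape mismatch (rather than returning 0.0 as the script does) is my own choice.

```python
import numpy as np


def binary_similarity(img1, img2):
    # Fraction of pixels that agree between two same-sized binary images
    if img1.shape != img2.shape:
        raise ValueError('images must have the same shape')
    return 1.0 - np.count_nonzero(img1 != img2) / img1.size


a = np.zeros((4, 4), 'uint8')
b = a.copy()
b[0, :] = 255                     # 4 of the 16 pixels now differ

print(binary_similarity(a, b))    # 0.75
print(binary_similarity(a, a))    # 1.0
```

For images that contain only 0 and 255, comparing with != marks the same pixels as cv2.bitwise_xor() followed by np.count_nonzero().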

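That's all for now

There is still work left to do for the Spring Bread Festival sticker point counting:

- The point calculation itself

- The end-to-end flow from receiving a single input image to calculating the points

Those will wait until next time.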