Google has apparently released something called GenAI Processors, which lets you build your own apps on top of generative AI, so I'm going to try it out.

I'm also using this page as a reference:

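GenAI Processorsで遊ぼう!コピペで試せるAIアプリ4選(PDF要約・翻訳・画像生成も!?) #Python - Qiita ("Let's play with GenAI Processors! Four AI apps you can try by copy-paste: PDF summarization, translation, even image generation!?")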

First, you need to create an API key.

You issue it from Google AI Studio:

Get API key | Google AI Studio

There's apparently a free tier, so I'll use that.

How much does the free tier allow?

Gemini API無料|制限・料金・APIキー取得方法を図解 | Hakky Handbook ("Gemini API for free: limits, pricing, and how to get an API key, illustrated")

For now, it looks like the lightweight model (Gemini 2.0 Flash-Lite) lets you send 1,500 requests per day.

| Model | Requests per minute (RPM) | Requests per day (RPD) | Tokens per minute (TPM) |
|---|---|---|---|
| Gemini 2.5 Pro Experimental | 5 | - | 1,000,000 |
| Gemini 2.0 Flash | 15 | - | 1,000,000 |
| Gemini 2.0 Flash-Lite | - | 1,500 | 1,000,000 |
| Gemma 3 | - | 14,400 | 15,000 |
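An RPM limit translates directly into a minimum spacing between calls: 60 seconds divided by the limit, e.g. 60 / 15 = 4 seconds for Gemini 2.0 Flash. Below is a minimal client-side throttle sketched under that assumption. `MinIntervalThrottle` is a hypothetical helper of my own, not part of genai-processors, and the limits in the table come from the page above and may have changed since.

```python
import asyncio
import time


class MinIntervalThrottle:
    """Hypothetical helper: spaces out calls so at most `rpm` start per minute."""

    def __init__(self, rpm: int) -> None:
        # Minimum gap between consecutive calls, e.g. 60 / 15 = 4.0 seconds.
        self.interval = 60.0 / rpm
        self._next_allowed = 0.0
        self._lock = asyncio.Lock()

    async def wait(self) -> None:
        # Serialize callers so each one reserves the next free time slot.
        async with self._lock:
            now = time.monotonic()
            delay = self._next_allowed - now
            if delay > 0:
                await asyncio.sleep(delay)
            self._next_allowed = max(now, self._next_allowed) + self.interval


async def demo() -> list[float]:
    # A very high RPM (interval = 0.05 s) so the demo finishes quickly.
    throttle = MinIntervalThrottle(rpm=1200)
    start = time.monotonic()
    stamps = []
    for _ in range(3):
        await throttle.wait()
        stamps.append(time.monotonic() - start)  # a real API call would go here
    return stamps


if __name__ == "__main__":
    print(MinIntervalThrottle(rpm=15).interval)  # 4.0
    print(asyncio.run(demo()))
```

Spacing calls evenly is the simplest way to respect a per-minute limit; it is stricter than a sliding window, but it never bursts past the quota.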

I also wondered whether a credit card has to be registered even for the free tier, but this page doesn't say so, so I'll proceed as is.

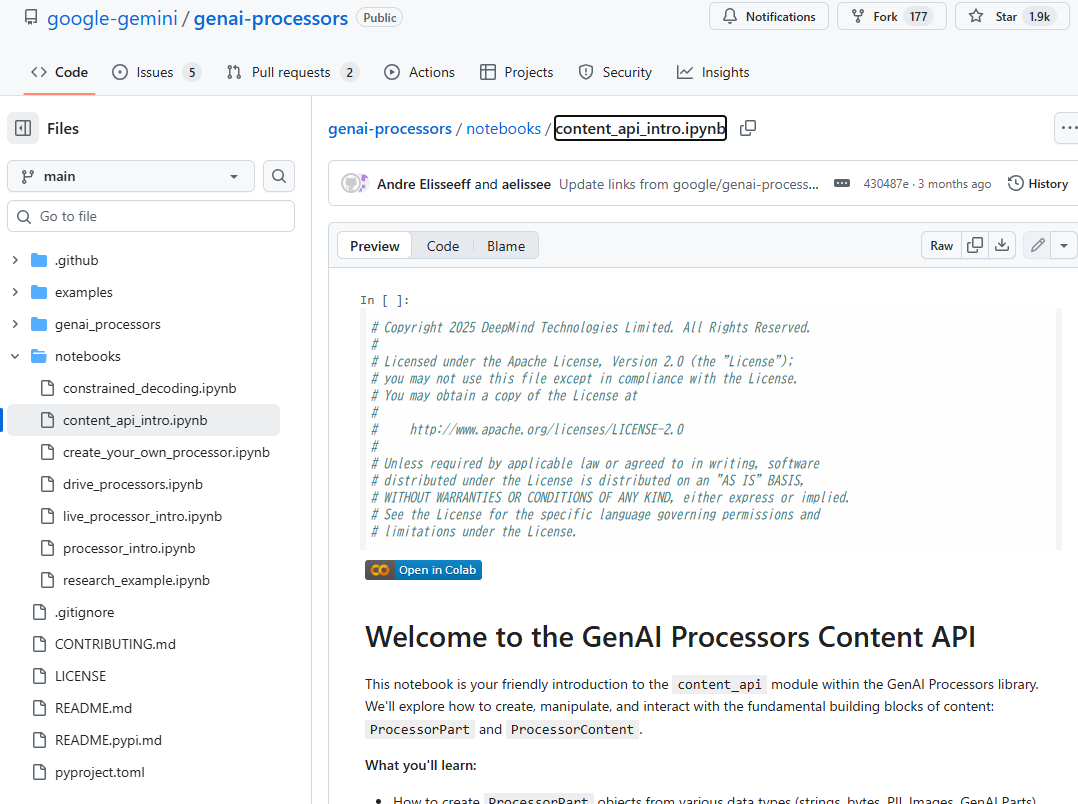

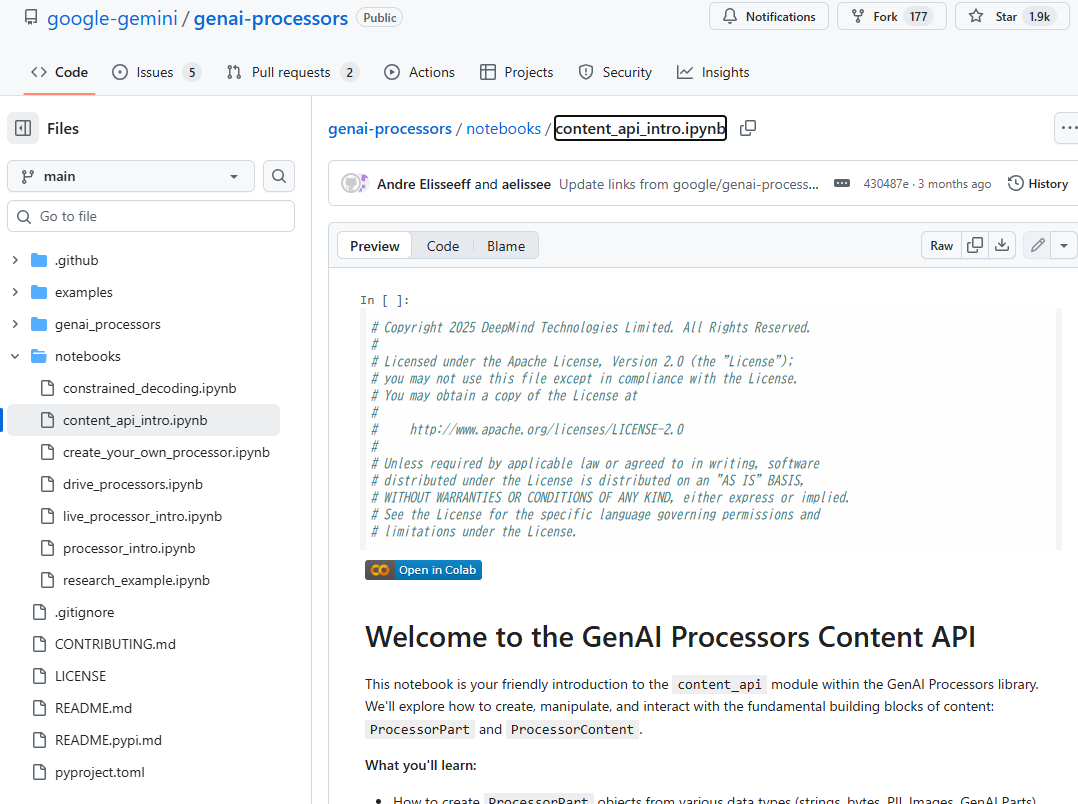

GitHub - google-gemini/genai-processors: GenAI Processors is a lightweight Python library that enables efficient, parallel content processing.

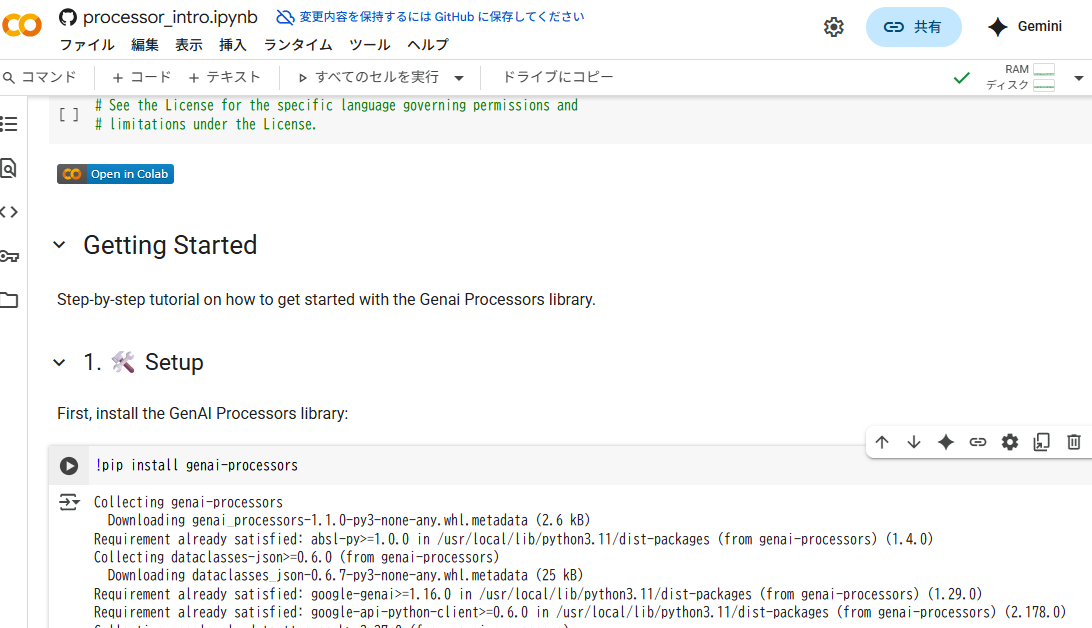

The official GitHub repository has tutorial .ipynb files in its `notebooks` directory. They open directly in Google Colab, so I'll work through them there, which is also a chance to try Colab.

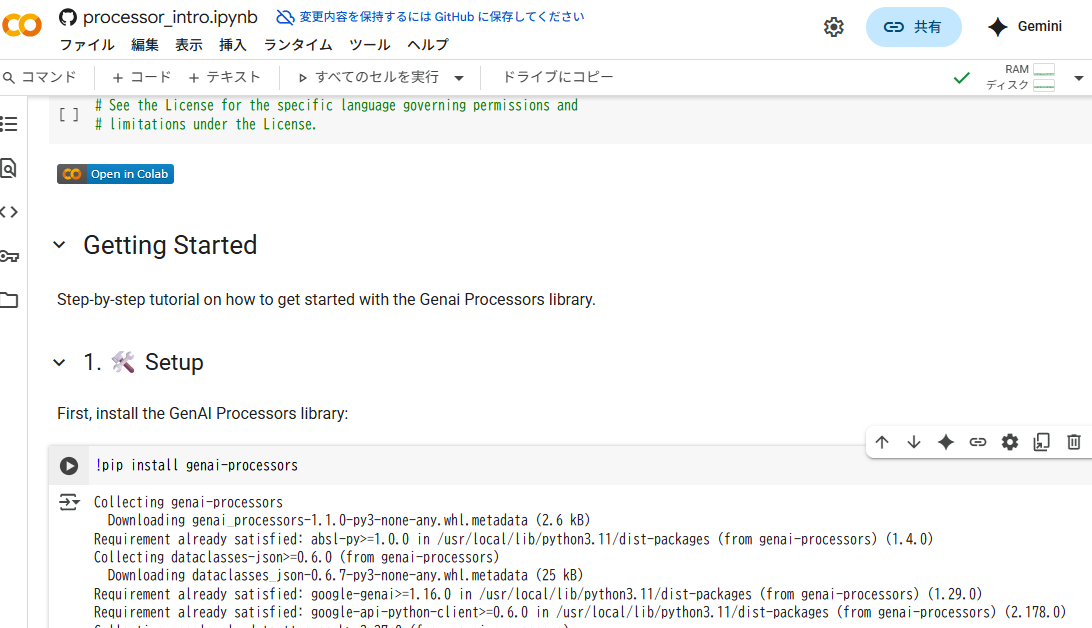

The notebook opens in Google Colab, but where does the file actually live? On GitHub? Do I have to copy it into my own account?

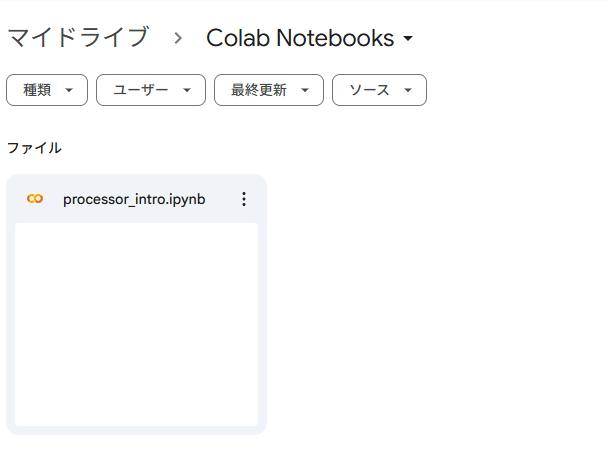

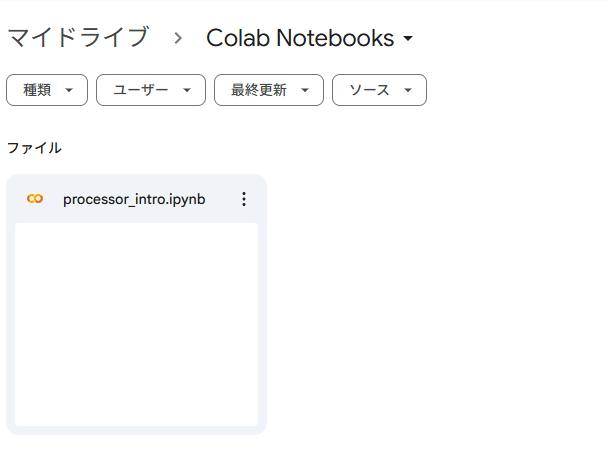

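It seems you can bring an opened notebook into your own account with "Copy to Drive".

After "Copy to Drive", a "Colab Notebooks" folder was created in my Google Drive and the notebook was copied into it. (By default the file name had "のコピー" ("copy of") appended, so I removed it.)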

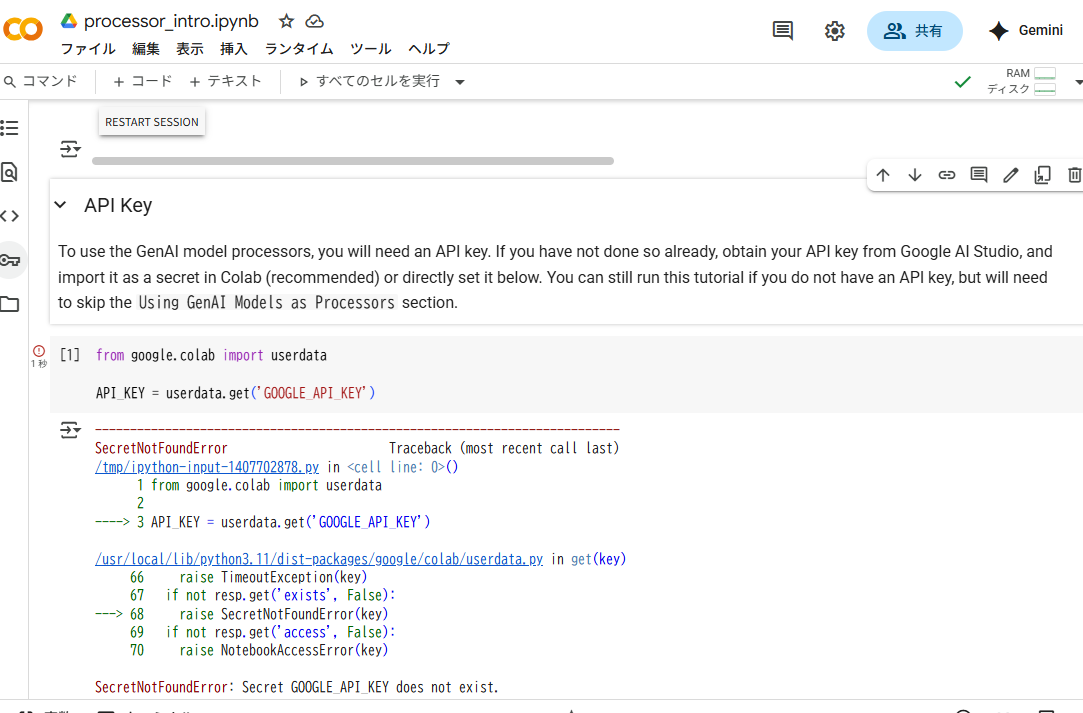

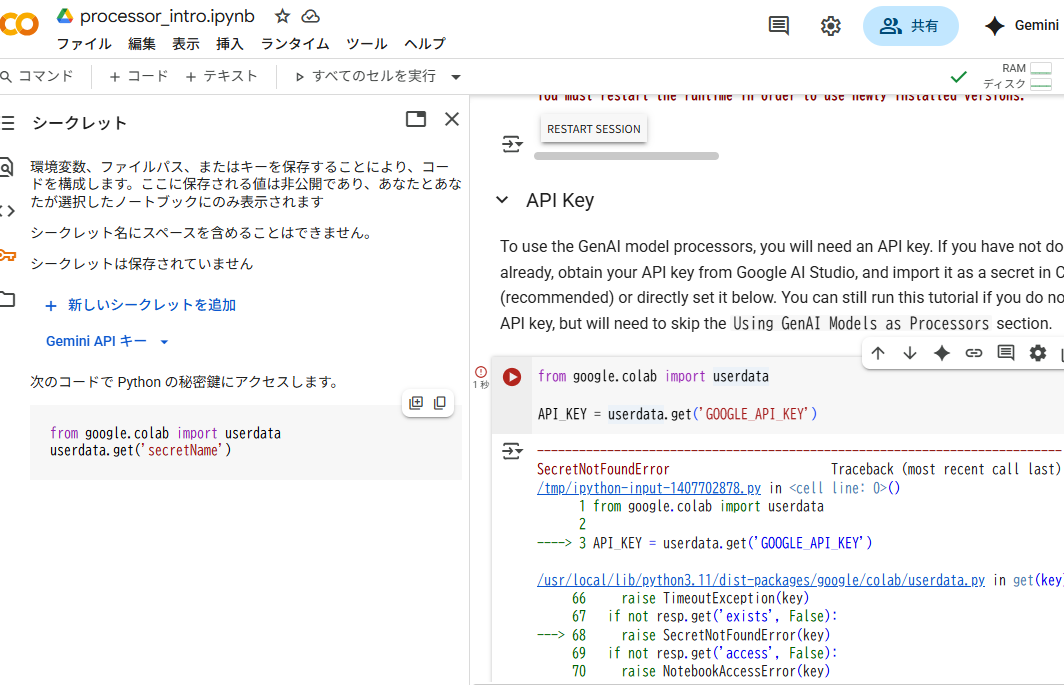

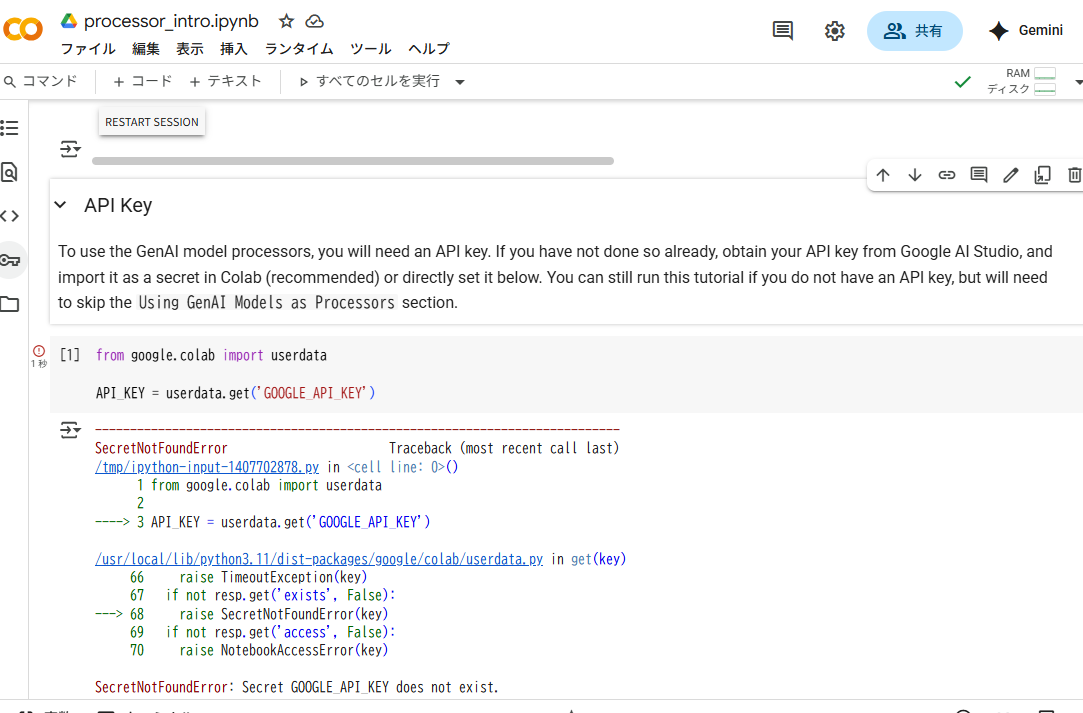

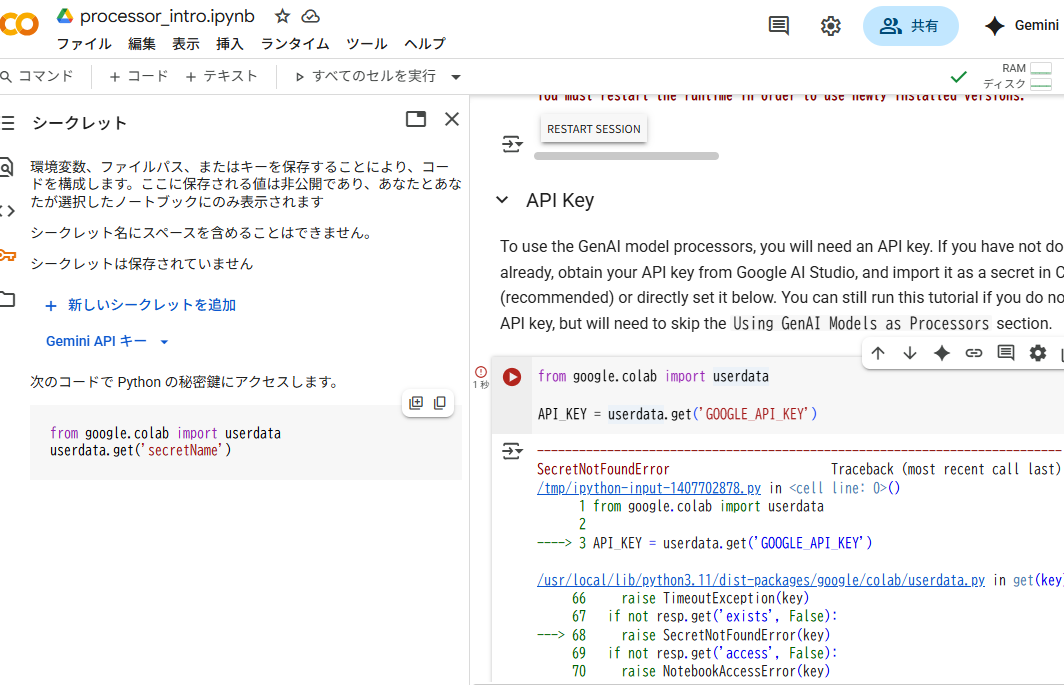

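Setting the API key in Colab

I didn't know how to use an API key in Colab, but it can be set from the "Secrets" icon in the left sidebar.

You don't even have to copy the key string yourself; it apparently can be imported via your Google account.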
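A small sketch for reading the key both in Colab and elsewhere: it tries Colab's `userdata.get` first and falls back to an environment variable when `google.colab` cannot be imported (for example when running locally). The function name `load_api_key` and the use of `GOOGLE_API_KEY` as both the Secret name and the environment variable name are my own assumptions.

```python
import os


def load_api_key(name: str = "GOOGLE_API_KEY") -> str:
    """Hypothetical helper: Colab Secrets first, then an environment variable."""
    try:
        # Only importable inside a Colab runtime.
        from google.colab import userdata
        return userdata.get(name)
    except ImportError:
        pass  # not running in Colab
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"API key {name!r} not found in Colab Secrets or the environment")
    return value
```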

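Concepts such as how to use async are hard to grasp.

So I'll make a new notebook of my own and try the things I want to try, my own way.

As I understand it, the library lets you handle text, audio, and images as input and output, and call AI models such as Gemini.

On top of that, asynchronous processing lets several steps run concurrently, I think.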
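To get a feel for the async part independently of the library, here is a plain-asyncio sketch (no genai-processors involved): an async generator consumed with `async for`, and two such consumers run concurrently with `asyncio.gather`, so their waits overlap instead of adding up. The names `slow_source` and `consume` are made up for illustration.

```python
import asyncio
import time


async def slow_source(label: str, n: int, delay: float):
    # Async generator: yields items one at a time, pausing between them
    # (standing in for waiting on an API).
    for i in range(n):
        await asyncio.sleep(delay)
        yield f"{label}-{i}"


async def consume(label: str) -> list[str]:
    # `async for` pulls items from the async generator as they become available.
    return [item async for item in slow_source(label, n=3, delay=0.1)]


async def main() -> tuple[list[list[str]], float]:
    start = time.monotonic()
    # Both consumers run concurrently: about 0.3 s in total, not 0.6 s.
    results = await asyncio.gather(consume("a"), consume("b"))
    return results, time.monotonic() - start


if __name__ == "__main__":
    results, elapsed = asyncio.run(main())
    print(results)  # [['a-0', 'a-1', 'a-2'], ['b-0', 'b-1', 'b-2']]
    print(f"{elapsed:.2f} s")
```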

Everything from here on is in a new Colab notebook.

First, set up the environment.

```
!pip install genai-processors
```

The output is long:

```
Collecting genai-processors
  Downloading genai_processors-1.1.0-py3-none-any.whl.metadata (2.6 kB)
Requirement already satisfied: absl-py>=1.0.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (1.4.0)
Collecting dataclasses-json>=0.6.0 (from genai-processors)
  Downloading dataclasses_json-0.6.7-py3-none-any.whl.metadata (25 kB)
Requirement already satisfied: google-genai>=1.16.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (1.31.0)
Requirement already satisfied: google-api-python-client>=0.6.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (2.179.0)
Collecting google-cloud-texttospeech>=2.27.0 (from genai-processors)
  Downloading google_cloud_texttospeech-2.27.0-py3-none-any.whl.metadata (9.6 kB)
Requirement already satisfied: google-cloud-speech>=2.33.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (2.33.0)
Requirement already satisfied: httpx>=0.24.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (0.28.1)
Requirement already satisfied: jinja2>=3.0.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (3.1.6)
Requirement already satisfied: opencv-python>=2.0.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (4.12.0.88)
Requirement already satisfied: numpy>=2.0.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (2.0.2)
Collecting pdfrw>=0.4 (from genai-processors)
  Downloading pdfrw-0.4-py2.py3-none-any.whl.metadata (32 kB)
Requirement already satisfied: Pillow>=9.0.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (11.3.0)
Requirement already satisfied: termcolor>=3.0.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (3.1.0)
Collecting pypdfium2>=4.30.0 (from genai-processors)
  Downloading pypdfium2-4.30.0-py3-none-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (48 kB)
Requirement already satisfied: xxhash>=3.0.0 in /usr/local/lib/python3.12/dist-packages (from genai-processors) (3.5.0)
Collecting marshmallow<4.0.0,>=3.18.0 (from dataclasses-json>=0.6.0->genai-processors)
  Downloading marshmallow-3.26.1-py3-none-any.whl.metadata (7.3 kB)
Collecting typing-inspect<1,>=0.4.0 (from dataclasses-json>=0.6.0->genai-processors)
  Downloading typing_inspect-0.9.0-py3-none-any.whl.metadata (1.5 kB)
Requirement already satisfied: httplib2<1.0.0,>=0.19.0 in /usr/local/lib/python3.12/dist-packages (from google-api-python-client>=0.6.0->genai-processors) (0.22.0)
Requirement already satisfied: google-auth!=2.24.0,!=2.25.0,<3.0.0,>=1.32.0 in /usr/local/lib/python3.12/dist-packages (from google-api-python-client>=0.6.0->genai-processors) (2.38.0)
Requirement already satisfied: google-auth-httplib2<1.0.0,>=0.2.0 in /usr/local/lib/python3.12/dist-packages (from google-api-python-client>=0.6.0->genai-processors) (0.2.0)
Requirement already satisfied: google-api-core!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.0,<3.0.0,>=1.31.5 in /usr/local/lib/python3.12/dist-packages (from google-api-python-client>=0.6.0->genai-processors) (2.25.1)
Requirement already satisfied: uritemplate<5,>=3.0.1 in /usr/local/lib/python3.12/dist-packages (from google-api-python-client>=0.6.0->genai-processors) (4.2.0)
Requirement already satisfied: proto-plus<2.0.0,>=1.22.3 in /usr/local/lib/python3.12/dist-packages (from google-cloud-speech>=2.33.0->genai-processors) (1.26.1)
Requirement already satisfied: protobuf!=4.21.0,!=4.21.1,!=4.21.2,!=4.21.3,!=4.21.4,!=4.21.5,<7.0.0,>=3.20.2 in /usr/local/lib/python3.12/dist-packages (from google-cloud-speech>=2.33.0->genai-processors) (5.29.5)
Requirement already satisfied: anyio<5.0.0,>=4.8.0 in /usr/local/lib/python3.12/dist-packages (from google-genai>=1.16.0->genai-processors) (4.10.0)
Requirement already satisfied: pydantic<3.0.0,>=2.0.0 in /usr/local/lib/python3.12/dist-packages (from google-genai>=1.16.0->genai-processors) (2.11.7)
Requirement already satisfied: requests<3.0.0,>=2.28.1 in /usr/local/lib/python3.12/dist-packages (from google-genai>=1.16.0->genai-processors) (2.32.4)
Requirement already satisfied: tenacity<9.2.0,>=8.2.3 in /usr/local/lib/python3.12/dist-packages (from google-genai>=1.16.0->genai-processors) (8.5.0)
Requirement already satisfied: websockets<15.1.0,>=13.0.0 in /usr/local/lib/python3.12/dist-packages (from google-genai>=1.16.0->genai-processors) (15.0.1)
Requirement already satisfied: typing-extensions<5.0.0,>=4.11.0 in /usr/local/lib/python3.12/dist-packages (from google-genai>=1.16.0->genai-processors) (4.15.0)
Requirement already satisfied: certifi in /usr/local/lib/python3.12/dist-packages (from httpx>=0.24.0->genai-processors) (2025.8.3)
Requirement already satisfied: httpcore==1.* in /usr/local/lib/python3.12/dist-packages (from httpx>=0.24.0->genai-processors) (1.0.9)
Requirement already satisfied: idna in /usr/local/lib/python3.12/dist-packages (from httpx>=0.24.0->genai-processors) (3.10)
Requirement already satisfied: h11>=0.16 in /usr/local/lib/python3.12/dist-packages (from httpcore==1.*->httpx>=0.24.0->genai-processors) (0.16.0)
Requirement already satisfied: MarkupSafe>=2.0 in /usr/local/lib/python3.12/dist-packages (from jinja2>=3.0.0->genai-processors) (3.0.2)
Requirement already satisfied: sniffio>=1.1 in /usr/local/lib/python3.12/dist-packages (from anyio<5.0.0,>=4.8.0->google-genai>=1.16.0->genai-processors) (1.3.1)
Requirement already satisfied: googleapis-common-protos<2.0.0,>=1.56.2 in /usr/local/lib/python3.12/dist-packages (from google-api-core!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.0,<3.0.0,>=1.31.5->google-api-python-client>=0.6.0->genai-processors) (1.70.0)
Requirement already satisfied: grpcio<2.0.0,>=1.33.2 in /usr/local/lib/python3.12/dist-packages (from google-api-core[grpc]!=2.0.*,!=2.1.*,!=2.10.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,!=2.6.*,!=2.7.*,!=2.8.*,!=2.9.*,<3.0.0,>=1.34.1->google-cloud-speech>=2.33.0->genai-processors) (1.74.0)
Requirement already satisfied: grpcio-status<2.0.0,>=1.33.2 in /usr/local/lib/python3.12/dist-packages (from google-api-core[grpc]!=2.0.*,!=2.1.*,!=2.10.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,!=2.6.*,!=2.7.*,!=2.8.*,!=2.9.*,<3.0.0,>=1.34.1->google-cloud-speech>=2.33.0->genai-processors) (1.71.2)
Requirement already satisfied: cachetools<6.0,>=2.0.0 in /usr/local/lib/python3.12/dist-packages (from google-auth!=2.24.0,!=2.25.0,<3.0.0,>=1.32.0->google-api-python-client>=0.6.0->genai-processors) (5.5.2)
Requirement already satisfied: pyasn1-modules>=0.2.1 in /usr/local/lib/python3.12/dist-packages (from google-auth!=2.24.0,!=2.25.0,<3.0.0,>=1.32.0->google-api-python-client>=0.6.0->genai-processors) (0.4.2)
Requirement already satisfied: rsa<5,>=3.1.4 in /usr/local/lib/python3.12/dist-packages (from google-auth!=2.24.0,!=2.25.0,<3.0.0,>=1.32.0->google-api-python-client>=0.6.0->genai-processors) (4.9.1)
Requirement already satisfied: pyparsing!=3.0.0,!=3.0.1,!=3.0.2,!=3.0.3,<4,>=2.4.2 in /usr/local/lib/python3.12/dist-packages (from httplib2<1.0.0,>=0.19.0->google-api-python-client>=0.6.0->genai-processors) (3.2.3)
Requirement already satisfied: packaging>=17.0 in /usr/local/lib/python3.12/dist-packages (from marshmallow<4.0.0,>=3.18.0->dataclasses-json>=0.6.0->genai-processors) (25.0)
Requirement already satisfied: annotated-types>=0.6.0 in /usr/local/lib/python3.12/dist-packages (from pydantic<3.0.0,>=2.0.0->google-genai>=1.16.0->genai-processors) (0.7.0)
Requirement already satisfied: pydantic-core==2.33.2 in /usr/local/lib/python3.12/dist-packages (from pydantic<3.0.0,>=2.0.0->google-genai>=1.16.0->genai-processors) (2.33.2)
Requirement already satisfied: typing-inspection>=0.4.0 in /usr/local/lib/python3.12/dist-packages (from pydantic<3.0.0,>=2.0.0->google-genai>=1.16.0->genai-processors) (0.4.1)
Requirement already satisfied: charset_normalizer<4,>=2 in /usr/local/lib/python3.12/dist-packages (from requests<3.0.0,>=2.28.1->google-genai>=1.16.0->genai-processors) (3.4.3)
Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.12/dist-packages (from requests<3.0.0,>=2.28.1->google-genai>=1.16.0->genai-processors) (2.5.0)
Collecting mypy-extensions>=0.3.0 (from typing-inspect<1,>=0.4.0->dataclasses-json>=0.6.0->genai-processors)
  Downloading mypy_extensions-1.1.0-py3-none-any.whl.metadata (1.1 kB)
Requirement already satisfied: pyasn1<0.7.0,>=0.6.1 in /usr/local/lib/python3.12/dist-packages (from pyasn1-modules>=0.2.1->google-auth!=2.24.0,!=2.25.0,<3.0.0,>=1.32.0->google-api-python-client>=0.6.0->genai-processors) (0.6.1)
Downloading genai_processors-1.1.0-py3-none-any.whl (219 kB)
Downloading dataclasses_json-0.6.7-py3-none-any.whl (28 kB)
Downloading google_cloud_texttospeech-2.27.0-py3-none-any.whl (189 kB)
Downloading pdfrw-0.4-py2.py3-none-any.whl (69 kB)
Downloading pypdfium2-4.30.0-py3-none-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (2.8 MB)
Downloading marshmallow-3.26.1-py3-none-any.whl (50 kB)
Downloading typing_inspect-0.9.0-py3-none-any.whl (8.8 kB)
Downloading mypy_extensions-1.1.0-py3-none-any.whl (5.0 kB)
Installing collected packages: pdfrw, pypdfium2, mypy-extensions, marshmallow, typing-inspect, dataclasses-json, google-cloud-texttospeech, genai-processors
Successfully installed dataclasses-json-0.6.7 genai-processors-1.1.0 google-cloud-texttospeech-2.27.0 marshmallow-3.26.1 mypy-extensions-1.1.0 pdfrw-0.4 pypdfium2-4.30.0 typing-inspect-0.9.0
```

```python
from google.colab import userdata

API_KEY = userdata.get('GOOGLE_API_KEY')
```

First, a simple call to Gemini: text in, text out.

```python
from genai_processors.core import genai_model
from google.genai import types as genai_types

genai_processor = genai_model.GenaiModel(
    api_key=API_KEY,
    model_name="gemini-2.0-flash",
    generate_content_config=genai_types.GenerateContentConfig(temperature=0.7),
)
```

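It seems the input has to be built with `streams` from genai_processors, so I'll construct it that way.

Does the input need to be split into words, or is a whole sentence fine? Let's check.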

```python
from genai_processors import streams

input_parts = ["Hello", "World"]
input_stream = streams.stream_content(input_parts)
```

Do I need `import asyncio` to use async?

```python
import asyncio

async for part in genai_processor(input_stream):
    print(part.text)
```

```
Hello
World! How can I help you today?
```
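On the `import asyncio` question: as far as I can tell, it isn't needed for the cell above. Colab runs an IPython kernel, which accepts top-level `await` / `async for` in a cell. In a plain `.py` script (for example when running locally), a top-level `async for` is a syntax error, so the loop has to live inside an `async def` that is started with `asyncio.run()`, and that is where `import asyncio` becomes necessary. The sketch below shows that shape. To keep it runnable offline, `stream_parts` and `fake_processor` are hypothetical stand-ins for `streams.stream_content(...)` and `genai_processor`; the fake yields plain strings, whereas the real processor yields parts you read via `part.text`.

```python
import asyncio
from typing import AsyncIterator, Iterable


async def stream_parts(items: Iterable[str]) -> AsyncIterator[str]:
    # Stand-in for streams.stream_content(...): yields the input parts one by one.
    for item in items:
        yield item


async def fake_processor(stream: AsyncIterator[str]) -> AsyncIterator[str]:
    # Hypothetical stand-in for genai_processor: echoes each input part in upper case.
    async for item in stream:
        yield item.upper()


async def main() -> list[str]:
    outputs = []
    # Same loop as in the notebook cell, but now inside an async function.
    async for part in fake_processor(stream_parts(["Hello", "World"])):
        print(part)
        outputs.append(part)
    return outputs


if __name__ == "__main__":
    # A script has no running event loop, so start one explicitly.
    asyncio.run(main())
```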

```python
input_parts = ["Hello. What's your name?"]
input_stream = streams.stream_content(input_parts)

async for part in genai_processor(input_stream):
    print(part.text)
```

```
I
am a large
language model, trained by Google. I don't have a name in the
traditional sense. You can just call me Google's AI.
```

```python
input_parts = ["Hello.", "What's", "your", "name?"]
input_stream = streams.stream_content(input_parts)

async for part in genai_processor(input_stream):
    print(part.text)
```

```
I
am a large
language model, trained by Google. I don't have a personal name.
```

It seems to work no matter how the input is split.
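The replies above break across lines mid-sentence ("I" / "am a large" / ...). Judging from the output, each `part` is one streamed chunk of the response, and `print` adds a newline after every chunk. Printing with `end=""`, or joining the chunks, gives the whole reply as one piece of text. Below is a stdlib-only sketch: `fake_stream` is a hypothetical stand-in whose chunk boundaries are made up; with the real model you would iterate `genai_processor(input_stream)` and use `part.text` for each chunk.

```python
import asyncio
from typing import AsyncIterator


async def fake_stream() -> AsyncIterator[str]:
    # Hypothetical stand-in for the model's streamed response chunks.
    for chunk in ["I", " am a large", " language model."]:
        yield chunk


async def collect_text(stream: AsyncIterator[str]) -> str:
    chunks = []
    async for chunk in stream:
        print(chunk, end="", flush=True)  # show chunks as they arrive, no extra newlines
        chunks.append(chunk)
    print()  # end the line once the stream is finished
    return "".join(chunks)


if __name__ == "__main__":
    asyncio.run(collect_text(fake_stream()))
```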

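That's all for now.

Next, I'll try audio or image input/output.

Is it fine to keep doing everything on Colab? If I want to turn this into an app, it might be better to run it locally.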